Other Questions

The Speed of Light

Setterfield: from Foundations of Physics, by Lehrman and p. 510-511:

In other words, the light is propelled by the changing electric and magnetic fields. The speed at which the light travels is dependent upon the electric-elastic property of space and the magnetic-inertial property of space. These properties are respectively called the permittivity and the permeability of space.
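The relationship in the quoted passage is usually written as c = 1/sqrt(permittivity × permeability). The following is a minimal sketch of that formula, assuming the CODATA 2018 values for the two vacuum constants; the function name `wave_speed` and the "doubling" scenario are illustrative choices, not something taken from the quoted textbook.

```python
import math

# CODATA 2018 recommended values for the vacuum constants (SI units).
EPSILON_0 = 8.8541878128e-12  # permittivity of free space, F/m
MU_0 = 1.25663706212e-6       # permeability of free space, N/A^2


def wave_speed(permittivity: float, permeability: float) -> float:
    """Return the electromagnetic wave speed 1 / sqrt(permittivity * permeability)."""
    return 1.0 / math.sqrt(permittivity * permeability)


# Speed computed from today's measured vacuum constants.
c = wave_speed(EPSILON_0, MU_0)
print(f"speed of light from vacuum constants: {c:.4e} m/s")

# Hypothetical scenario, for illustration only: if both properties
# were doubled, the computed wave speed would be halved.
c_scaled = wave_speed(2 * EPSILON_0, 2 * MU_0)
print(f"ratio after doubling both properties: {c_scaled / c:.3f}")
```

The sketch only evaluates the formula; it says nothing about whether or how the two properties might change.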

Setterfield: If the electric and magnetic properties of free space alter, the speed of the wave will also change. The electric and magnetic properties of free space are determined by the zero point energy which fills all space. If there is a change in the strength of the zero point energy at any point in space, the speed of light will also change at that point. If zero point energy increases, the speed of light decreases. There is a helpful way of looking at this. Because energy exists in free space, and since Einstein’s equation shows that matter and energy are inter-convertible, the zero point energy allows the manifestation of what are called ‘virtual particle pairs.’ The greater the strength of the zero point energy, the greater the number of these virtual particle pairs in any given volume of space at any given moment. These virtual particle pairs act as obstacles to the progress of a photon of light. For a more detailed layman’s explanation here, please see the Basic Summary written by my wife.

Setterfield: The presence or absence of virtual particle pairs determines the speed of light in space. What this means, however, is their presence, or lack of it, can make the 'thickness' of space different. You can see an example of what this does to the speed of light by sticking a straw into a glass of water. The straw appears 'broken' at the surface of the water, right? That is because the light going through water is going through a thicker, or denser, medium than when it goes through air, so it is traveling a bit more slowly. Virtual particles in space do the same thing to light -- the more of them there are, the longer it takes light to reach its final destination.
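The straw-in-water example can be put into numbers. This is a small sketch of ordinary refraction only, assuming approximate textbook refractive indices for air and water; the function names are illustrative, and the code does not model virtual particles or the vacuum itself.

```python
import math

C_VACUUM = 299_792_458.0  # speed of light in vacuum, m/s (exact by SI definition)

# Approximate textbook refractive indices for visible light.
N_AIR = 1.0003
N_WATER = 1.333


def speed_in_medium(refractive_index: float) -> float:
    """Speed of light in a medium: v = c / n."""
    return C_VACUUM / refractive_index


def refraction_angle_deg(incidence_deg: float, n_from: float, n_to: float) -> float:
    """Snell's law: n_from * sin(theta_from) = n_to * sin(theta_to)."""
    sine = n_from * math.sin(math.radians(incidence_deg)) / n_to
    if abs(sine) > 1.0:
        raise ValueError("total internal reflection: no refracted ray")
    return math.degrees(math.asin(sine))


v_air = speed_in_medium(N_AIR)
v_water = speed_in_medium(N_WATER)
bent = refraction_angle_deg(45.0, N_AIR, N_WATER)

print(f"speed in air:   {v_air:.4e} m/s")
print(f"speed in water: {v_water:.4e} m/s")
print(f"a ray hitting the water at 45 degrees continues at about {bent:.1f} degrees")
```

The change of direction at the surface is what makes the straw look broken: the slower the light travels in the second medium, the more the ray bends toward the perpendicular.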

Setterfield: At any one time, assuming the properties of the vacuum are uniform, yes, the speed of light is constant in a vacuum. However, there is something called the Zero Point Energy, or ZPE (check the ZPE section in Discussions for further information on this), which produces virtual particles that flash in and out of existence, and they DO slow down the arrival of light at its final destination. So any change in the ZPE out there in the vacuum will change the speed of light. However, changes in the ZPE occur throughout the universe at the same time, except for some relatively small local secondary effects -- which I am becoming convinced are connected with gravity as we know it.

Setterfield: The answer to this “missing helium” problem is being sought by both evolutionists and creationists. This may surprise you since the problem that evolutionists face might not be expected to be a problem to creationists. However, the creationist RATE group, which is studying the whole problem of radioactive decay and radiometric dating, is slowly swinging around to the idea of accelerated rates of radioactive decay, at least during certain specific periods of earth history, such as the time of the Flood. The model that is developing from the RATE group is “a short burst of high-rate radioactive decay and helium production, followed by 6000 years of He diffusion at today’s temperatures in the formation” [D. R. Humphreys in “Radioisotopes and the Age of the Earth”, L. Vardiman et al Editors, p.346, ICR/CRS 2000]. The model that Humphreys and his RATE associates are working on envisages that “since Creation, one or more episodes occurred when nuclear decay rates were billions of times greater than today’s rates. Possibly there were three episodes: one in the early part of the Creation week, another between the Fall and the Flood, and the third during the year of the Genesis Flood” [Humphreys, op. cit., p.333]. No reason is ever given for this change in the decay rates except for it simply being a miracle of God. While I don't disagree with the existence of miracles, it seems there is a basic physical cause for this change which was not a matter of appearing suddenly two or three different times, but rather of having decreased at a specific rate for a specific time and reason. Their position contrasts with my proposition of diminishing decay rates following the form of the redshift/lightspeed curve over a somewhat more extended period. NOTE: In 2003, the RATE group published an excellent paper on the helium question which may be found here. My comments on this paper are below. 
However, the effects of this accelerated decay, no matter which of these two models is being used, are similar. Effectively, there has been a high production rate of helium in the earlier days of the earth which should yield higher amounts of helium in our atmosphere than observed at the moment. By contrast, the old creationist position was that radiometric dates were basically in error for one reason or another and that radioactive decay at today’s rate was all that existed over the last 6000 years. The result of that old creationist position was that there was no case to answer on the helium question, since none was “missing”. This older position was the one that led to the missing helium being an effective argument for a young earth. In his article in the above book by the RATE group, Humphreys indicates where some of the original thinking on this issue was incorrect through failure to note the evidence from the data. As a result of further investigation, recent creationist research has indicated that the situation may not be as straightforward as the older model had suggested. This result then leads directly to the question that you posed. Where is the “missing” helium? The brief answer is that it is still locked up in the interior of the earth and in the rocks in the earth’s crust. Let me explain a little further. In 1999, an assessment of data collected in 1997 indicated that the bottom 1000 km of the earth’s mantle contained anomalous reservoirs of heat producing elements [“Researchers propose a new model for earth mantle convection,” MIT News Release, 31 March 1999. This is discussed in more detail in the appendix of A Brief Stellar History]. In other words, there is a layer in the earth’s interior where the radioactive elements were concentrated, and from which some have come to reside in the crust as a result of ongoing geological processes. 
This means that the majority of helium must still be within the earth’s mantle, not having had enough time to work its way to the surface on the contracted timescales offered by both the RATE and Setterfield models. However, that does not account for the radioactive material which has come to reside in the crust of the earth. Where is the helium emitted by radioactive decay from that source? The possible answer is a surprise. It had been noted in 1979 by R. E. Zartman in a Los Alamos Science Laboratory Report Number LA-7923-MS that zircons from deep boreholes below the Jemez volcanic caldera in New Mexico gave an age for the granitic basement complex there of about 1.5 billion atomic years. However, when zircons from this complex were analyzed by Gentry et al in 1982, they were found to contain very large percentages of helium, despite the hot conditions [R.V. Gentry, G.L. Glish and E.H. McBay “Differential helium retention in zircons…” Geophysical Research Letters Vol.9 (1982), p.1129]. In other words, these zircon crystals had retained within them the radioactive decay products of almost 1.5 billion atomic years, without very much diffusing out. Humphreys concludes that one of the strongest pieces of evidence for accelerated decay rates over a relatively short timespan is this high retention of radiogenic helium in microscopic zircons [op.cit., p.344, 350]. I find myself in agreement with this assessment. Therefore the answer to your question based on these assessments is that (1) radiogenic helium is trapped in the interior of the earth, not having had sufficient time to be brought to the surface from the radioactive layer that exists at a depth of about 1700 km, while (2) much of that which was brought up to the crust has not diffused out from the host rocks, again because of insufficient time and also because of much lower diffusion rates than previously anticipated. This position has at least some experimental data to support it.

Comments on the RATE paper, Helium Diffusion Rates Support Accelerated Nuclear Decay

Setterfield: The paper by Humphreys, Baumgardner, Austin and Snelling on helium diffusion rates in zircons from the Jemez Granodiorite is an important contribution to the debate on the missing helium in our atmosphere. The Jemez Granodiorite can be found on the west flank of the volcanic Valles Caldera near Los Alamos, New Mexico, and has a radiometric date of 1.5 billion years. That places this granodiorite in the Precambrian Era geologically. As Humphreys et al report in their Abstract, “Up to 58% of the helium (that radioactivity would have generated during the … 1.5 billion year age of the granodiorite) was still in the zircons. Yet the zircons were so small that they should not have retained the helium for even a tiny fraction of that time. The high helium retention levels suggested to us…that the helium simply had not had enough time to diffuse out of the zircons…” If indeed there was not enough time for 1.5 billion atomic years’ worth of helium from radioactive decay to diffuse out of the zircons, this implied two things. First, that the radioactive decay rate must have been higher in the past, and second, that the Precambrian rocks in which they were found had an actual age much less than the atomic or radiometric age would suggest. Indeed, the authors state that the data “limit the age of these rocks to between 4,000 and 14,000 years.” The analysis by these authors was mainly focused on zircon crystals from the granodiorite. As they point out, zircon has a high hardness, high density, and high melting point. It is also important to note that uranium and thorium atoms can replace up to 4% of the normal zirconium atoms in any given crystal. In all, seven samples were used in their analysis and were obtained from depths ranging up to 4310 metres and temperatures up to 313 degrees Celsius. An important conclusion became apparent to the authors immediately. 
They state: “Samples 1 through 3 had helium retentions of 58, 27 and 17 percent. The fact that these percentages are high confirms that A LARGE AMOUNT OF NUCLEAR DECAY did indeed occur in the zircons [their emphasis]. Other evidence strongly supports much nuclear decay having occurred in the past….We emphasize this point because many creationists have assumed that “old” radioisotopic ages are merely an artifact of analysis, not really indicating the occurrence of large amounts of nuclear decay. But according to the measured amount of lead physically present in the zircons, approximately 1.5 billion years worth – at today’s rates – of nuclear decay occurred. Supporting that, sample 1 still retains 58% of all the alpha particles (the helium) that would have been emitted during this decay of uranium and thorium to lead.” This statement is a pleasing development, and makes a refreshing change to some creationist approaches. A glance at the analysis figures indicates that the helium retention levels decrease as the rock temperature increases. Another important component is the diffusion rates of the minerals surrounding the zircons, which are frequently biotite or muscovite, both forms of mica. Analysis disclosed that, in the temperature range of interest, these micas had a somewhat higher diffusion rate than the zircons. In other words, the material surrounding the zircons did not impede the outflow of helium as much as the zircons did. The analysis concluded that “the observed diffusion rates are so high that if the zircons had existed for 1.5 billion years at the observed temperatures, samples 1 through 5 would have retained MUCH LESS HELIUM THAN WE OBSERVE [their emphasis]. This strongly implies they have not existed for nearly so long a time…In the meantime we can say the data of Table 4, considering the estimates of error, indicate an age between 4000 and 14,000 years. 
This is far short of the 1.5 billion year uniformitarian age!” The final section of the paper considered four alternative explanations for the data, but the authors were able to dismiss most of them readily. The first of these was the assertion that temperatures in the Jemez Granodiorite before the Pliocene-Pleistocene volcanism were low enough to make the diffusion coefficients small enough to retain the helium. The analysis of this contention concluded that for this to happen, “the pre-Pliocene temperature in the granodiorite would have to have been about -100 degrees Celsius, near that of liquid xenon…[This] demonstrates how zircons would need unrealistically low temperatures to retain large amounts of helium for … eons of time.” A second line of defense might be to claim that the helium 4 concentration in the surrounding rock is presently about the same as in the zircons. However, the authors point out that the measured values of the helium concentration in the surrounding biotite is much lower than in the zircons. Thus helium must still be diffusing out of the zircons into the biotite. In addition, they state “the Los Alamos geothermal project made no reports of large amounts of helium (commercially valuable) emerging from the boreholes, thus indicating that there is not much free helium in the formation as a whole.” A third objection might be to claim that the team involved made a huge mistake, and that the actual amounts of helium were really many orders of magnitude smaller than reported. A discussion in their Appendix C indicates otherwise, as also does the fact that similar data have been obtained by others from that and other formations. The authors took some trouble to refute the fourth possibility. Basically, that possibility makes use of the geoscience concept of a ‘closure temperature’ to claim that zircons below that temperature are permanently closed systems. 
Thus no significant helium would be lost by diffusion and the high helium content of zircons would thereby be explained. The authors respond in the following terms: “After the zircon cools below the closure temperature, helium begins to accumulate in it…Later, as the temperature levels off to that of the surrounding rock, the diffusion coefficient becomes constant…As the amount of helium in the zircon increases, Fick’s laws of diffusion (sect.3)say the loss rate increases. Eventually, even well below the closure temperature, the loss rate approaches the production rate, an event we call the ‘reopening’ of the zircon….If the closure interval were long compared to the age of the zircon, then the zircon would indeed be a closed system. But [the data] gives us values [for the closure interval] between a few dozen years and a few thousand years depending on the temperature of the sample in the borehole. Those times are very small compared to the uniformitarian age of 1.5 billion years…Thus the closure temperature does not help uniformitarians in this case, because the closure interval is brief.” If this new approach to the fourth problem holds up to closer scrutiny, then the authors have a strong case for their conclusion, namely that “The data and our analysis show that over a billion years worth of nuclear decay have occurred very recently, between 4,000 and 14,000 years ago…[Consequently] helium diffusion casts doubt on uniformitarian long-age interpretations of nuclear data and strongly supports the young world of Scripture.” The groundbreaking research is of great value to the science community as a whole, not just the creation community. We need to be grateful for the work these men have put into this research.

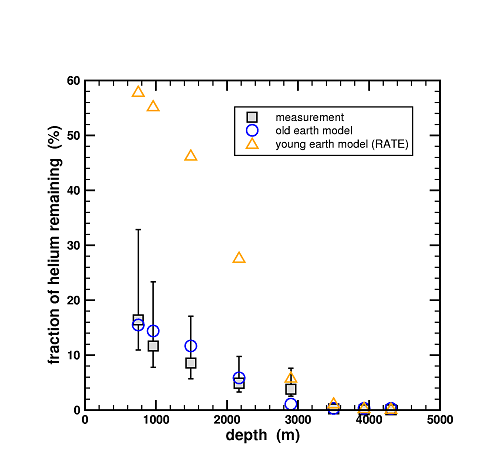

Setterfield: I was asked if I had responded to or “solved” the problem raised by Russ Humphreys in relation to Precambrian rocks. In one of Humphreys’ replies he specifically mentioned the problem of Helium retention in zircons. He claims that the RATE zircon crystal data show high retention rates of radiogenically produced helium in the zircons after the uranium atoms in them had decayed. The actual radiometric age of the zircons was 1.5 billion years or so. Therefore, the argument goes that if the rocks with the zircons were really 1.5 billion years old, then all of the radiogenic helium would have diffused out of them and none would be left. The RATE data, which showed some helium retention, were then used to conclude that we had a young geological column which underwent several huge spikes in radioactive decay rates during the last 6000 years. In other words, the RATE group claimed that “helium diffusion age” was in keeping with a young cosmos only 6000 years old, while the radiometric age from the decay of uranium atoms of 1.5 billion was thereby unreliable. Humphreys suggested to the correspondent that these data also disprove our Zero Point Energy (ZPE) Model. He did this on the basis that a lower ZPE in the past meant not only higher radioactive decay rates, but also proportionally higher diffusion rates. As a result, in the same way that standard science would expect little helium to be retained in the zircons because of billions of (atomic) years of diffusion, so also the ZPE model would expect similarly small amounts of helium to be retained because of enhanced diffusion processes over a much shorter time in actual years. Humphreys then states that the fact that more helium atoms than expected are retained in the zircons proves that the ZPE approach is wrong. The implication is that only the RATE interpretation is correct. There are several problems with Humphreys’ proposition. First, let us look at a general principle. 
It is accepted that diffusion processes occur because of pressure differences due to differences in concentrations of the atoms involved. If the pressure of the gas of helium atoms inside the zircon crystal is greater than that outside, they will migrate (or diffuse) out. Conversely, if the pressure of helium atoms (a gas) outside is greater than inside the zircon crystal, then the helium will tend to migrate into the crystal. Alternately, if the pressure of the helium atom gas outside the crystal is the same as inside the crystal, migration or diffusion of helium atoms will not occur. These concepts are enshrined in Fick’s 1st Law which states that “Steady state diffusion is proportional to the concentration gradient.” This is relevant in the case in hand since it is true that the ZPE model can have some enhanced diffusion processes over a fairly short time. Under ordinary circumstances, this would allow most helium atoms to migrate out of the zircon crystal. However, unlike the standard model, the ZPE model has the rates of radioactive decay systematically enhanced, but not in two or three “spikes” as the RATE model has. As a result, another factor is also operating. In the rock in which the zircon crystals occurred, there would have been large amounts of uranium whose continuing enhanced decay gave off a significant number of helium atoms as a by-product. In a word, the zircon crystals throughout the rock in question would have been immersed in a “bath” of helium atoms. This helium, external to the zircons, would present a much higher concentration than currently existing for an extended period. Therefore the diffusion rate of helium from within the zircon crystals would have been significantly lower or nonexistent in the early days of the rock. For this reason alone, higher than expected concentrations of helium would occur on the ZPE model in a manner not applicable to the RATE model. 
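
The general principle just described, Fick's first law, can be sketched numerically. This is a minimal illustration assuming a one-dimensional, steady-state form J = -D (C_outside - C_inside) / L; the function name and every number below are hypothetical values chosen for illustration, not measurements from the zircon studies.

```python
def fick_flux(diffusivity: float, conc_inside: float,
              conc_outside: float, thickness: float) -> float:
    """Steady-state Fick's first law across a layer of given thickness.

    Returns the flux J = -D * (C_outside - C_inside) / thickness.
    A positive value means net movement from inside to outside.
    """
    if thickness <= 0:
        raise ValueError("thickness must be positive")
    gradient = (conc_outside - conc_inside) / thickness
    return -diffusivity * gradient


# Hypothetical illustrative values (arbitrary but consistent units).
D = 1.0e-18   # diffusivity
L = 1.0e-6    # distance over which the concentration changes
C_IN = 100.0  # helium concentration inside the crystal

low_bath = fick_flux(D, C_IN, 10.0, L)    # little helium outside
high_bath = fick_flux(D, C_IN, 90.0, L)   # crystal sits in a helium "bath"
balanced = fick_flux(D, C_IN, C_IN, L)    # equal concentrations
reverse = fick_flux(D, C_IN, 150.0, L)    # more helium outside than inside

print(f"outward flux, low external helium:  {low_bath:.3e}")
print(f"outward flux, high external helium: {high_bath:.3e}")
print(f"flux with equal concentrations:     {balanced:.3e}")
print(f"flux with excess external helium:   {reverse:.3e}")
```

The sketch shows only the direction and relative size of the flux for a given concentration difference; it makes no claim about what the external helium concentration actually was.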
Second, examination of the RATE model shows it has at least 5 flaws in either the data analysis or the modeling. Let me mention a couple. When temperatures are high, the diffusion rate tends to increase. Alternately, when the temperatures are low, the diffusion rate is low. To get their required result, the RATE group assumed that diffusion occurred at a constant temperature throughout the entire period. This is unrealistic to say the least. A model of systematically dropping temperatures may have been more appropriate. Even better would be one with an early drop followed by a variable temperature dependent upon geologic conditions. This would also be appropriate in the case of the ZPE model. In this case, the lower ZPE strength in the early universe also means different vacuum properties and different heat flow rates occurred which may have allowed for more rapid cooling of heated rock. As a result, lower than expected temperatures may have prevailed. This has a bearing on the next problem noted with the RATE analysis. The problem is this. At low temperatures, most helium atoms migrate away from the crystal only at the point where there are defects in the crystal structure. This means that all the helium atoms elsewhere in the crystal tend to remain trapped in. They will only move if the temperature is raised sufficiently high. So there are several different “populations” or “domains” of helium atoms to contend with. The RATE group did not even consider this in their approach, but rather used a very simple kinetic model. Dr. Gary Loechelt has pointed out that these two items alone invalidate many RATE conclusions. His own paper of September 2008 with its extensive analysis demonstrates this. A third point has been made by Loechelt. He has noted that the RATE analysis took Gentry’s estimate for the total amount of helium produced from nuclear decay. This estimate is now known to be off by a factor of over three. 
Once this error is corrected, the amount of helium retained in the analysis would drop significantly. The outcome of all this is that the revised old earth model of Loechelt is in close agreement with the data and a better fit to that data than the RATE model. He produced this graph:

Others have severely criticized Humphreys’ analysis on similar and other grounds. The outcome of all this is that subsequent discussions have shown that Humphreys placed too much confidence in the RATE analysis. As a result of this and other misconceptions, he drew some incorrect conclusions about our ZPE model, which is in accord with the data. I therefore suggest that your correspondent re-examine the situation and revise his own conclusions accordingly.
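
The temperature point raised earlier in this reply (diffusion is faster in hot rock and slower in cool rock) is commonly described with an Arrhenius relation, D(T) = D0 · exp(-Ea / (R·T)). The reply itself does not name this relation, so treat the following as a sketch under that assumption; `D0` and `EA` are hypothetical placeholder values, not parameters from the RATE or Loechelt analyses.

```python
import math

GAS_CONSTANT = 8.314462618  # J/(mol K)


def arrhenius_diffusivity(d0: float, activation_energy: float,
                          temperature_k: float) -> float:
    """Diffusivity under the assumed Arrhenius form D = D0 * exp(-Ea / (R * T))."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return d0 * math.exp(-activation_energy / (GAS_CONSTANT * temperature_k))


# Hypothetical placeholder parameters, for illustration only.
D0 = 1.0e-6  # pre-exponential factor, m^2/s
EA = 1.5e5   # activation energy, J/mol

for celsius in (100, 200, 300):
    kelvin = celsius + 273.15
    d = arrhenius_diffusivity(D0, EA, kelvin)
    print(f"{celsius:>3} C: D = {d:.3e} m^2/s")
```

Because the dependence is exponential, a model that holds temperature constant and one that lets it fall over time can give very different totals for the helium lost.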

Setterfield: As far as radioactive heating turning the earth into a plasma is concerned, this is also incorrect. First, the Genesis 1:2 record indicates that the earth started off in a cold state with an ocean covering the surface. This contrasts with current astronomical paradigms, but I consider that we have an eyewitness account here. We take this as our starting point, together with the more rapid rate of radioactive decay. It is currently accepted that there was/is a region in the earth's mantle from about 1700 km down to a depth of 2700 km which has the radioactive elements concentrated in it. These elements came to be incorporated in the crust by later geological processes. On this basis, calculation shows that the temperature of the core today, some 7200 years later, would be about 5800 degrees with 1900 degrees now at the top of the lower mantle. More information on this is available in Behavior of the Zero Point Energy and Atomic Constants in appendices 2 and 4.

Setterfield: In brief, biological processes remain essentially unaffected by variations in lightspeed, c. If the old collision theory of reactions were true, all reactions would proceed to the point of completion in a fraction of a second, even with the current speed of light. However, it can be shown that most reactions are controlled by a rate-determining step involving an activated complex. Reaction with the activated complex is dependent upon both the number of particles or ions approaching it, and the time these particles spend in the vicinity of the activated complex. As the physics of that step are worked through, it can be shown that even though more ions may come within reaction distance of the activated complex, in proportion to c, the time that they spend in its vicinity is proportional to 1/c, so the final result is that reaction times are unchanged with higher c values. Consequently, brain processes, muscular contractions, rates of growth, time between generations, etc. all would occur at the same rate with higher c as they do today. 2012 Addendum -- further research has added a lot more information here. Please see this section of Behavior of the Zero Point Energy and Atomic Constants. In addition Research on Fossil Gigantism is also yielding clues.

Setterfield: The energy of a photon E is given by [hf] or [hc/w] where h is Planck's constant, f is frequency, w is wavelength, and c is light-speed. Two situations exist. First, for LIGHT IN TRANSIT through space. As light-speed drops with time, h increases so that [hc] is a constant. It should be emphasised that frequency, [f], is simply the number of wave-crests that pass a given point per second. Now wavelengths [w] in transit do not change. Therefore, as light-speed c is dropping, it necessarily follows that the frequency [f] will drop in proportion to c as the number of wave-crests passing a given point will be less, since [c = fw]. Since [f] is therefore proportional to c, and [h] is proportional to [1/c], it follows that [hf] is a constant for light in transit. Since both [hc] and [w] are also constants for light in transit, this means that [hc/w] and [hf] do not alter. In other words, for light in transit, E the energy of a photon is constant, other factors being equal. The second situation is that pertaining at the TIME OF EMISSION. When c is higher, atomic orbit energies are lower. This happens in a series of quantum steps for the atom. Light-speed is not quantised, but atomic orbits are. As light-speed goes progressively higher the further we look back into space, so atomic orbit energies become progressively lower in quantum steps. This lower energy means that the emitted photon has less energy, and therefore the wavelength [w] is longer (redder). This lower photon energy is offset by proportionally more photons being emitted per unit time. So the total energy output remains essentially unchanged. As a result of these processes at emission, light from distant galaxies will appear redder (the observed redshift), but there will be more photons so distant sources will appear to be more active than nearby ones. Both of these effects are observed astronomically.
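The in-transit argument above can be laid out step by step. The lines below take the reply's own premises as given (h proportional to 1/c, and wavelength w unchanged in transit) and simply follow the algebra; the symbols are the ones used in the text.

```latex
\begin{align*}
E &= hf = \frac{hc}{w}, \qquad c = fw
  && \text{(definitions used in the text)} \\
h &\propto \frac{1}{c}, \qquad w = \text{constant}
  && \text{(premises stated for light in transit)} \\
f &= \frac{c}{w} \propto c
  && \text{(since } w \text{ is constant)} \\
hf &\propto \frac{1}{c} \cdot c = \text{constant}
  && \text{(first form of } E\text{)} \\
\frac{hc}{w} &= \frac{\text{constant}}{\text{constant}} = \text{constant}
  && \text{(second form of } E\text{, since } hc \text{ is constant)}
\end{align*}
```

Both expressions for E therefore give the same result under these premises: the photon energy does not change while the light is in transit.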

Setterfield: Thank you for your comments and question. Let me see if I can answer your points satisfactorily. You point out that some claim that a higher value for lightspeed would affect biological processes. This is based on the fact that some atomic processes are faster when lightspeed is higher. However, as is shown on our website in Behavior of the Zero Point Energy and Atomic Constants, in this section, this does not affect reaction rates, and hence biological processes remain unchanged. This is the case because energy is being conserved throughout any changes in the speed of light. In turn, this means that other associated atomic constants are also varying in a way that mutually cancels out any untoward effects. Another way of looking at it is to see biological processes as occurring at the level of molecules rather than at the subatomic level. At the molecular level, the basic quantity that is governing effects is the electronic charge. This has remained constant throughout time, so molecular interactions remain unchanged.

Setterfield: In response to this, there has been a recent article pointing out that in the early earth all radioactive elements were contained within a narrow band some 2000 km below the surface of the earth, in the mantle. I think mention is made of this in "A Brief Earth History", and the reference is in there. The more rapid rate of decay earlier in history, particularly in antediluvian times, will mean that there was a greater flux of neutrons. However, these were confined to the earth's interior prior to the Flood. It is only as a result of the Flood processes that some of this material was lifted to the surface, thereby becoming dangerous. By this time, the speed of light had dropped dramatically, thereby also dramatically decreasing the neutron flux. There is another point to consider as well. When the speed of light was higher, the half-life of the released neutron was also much shorter. Therefore the distance traveled by the free neutrons before decay would be the same as today at a maximum, and possibly much less. This would therefore restrict the range over which any potential damage to living tissue could be done. In the final part of question 4, you mention that the interaction of neutrons with Nitrogen 14 may also have been less when the speed of light was higher. This does have implications for the build-up of Carbon 14 in our atmosphere. I hope this helps.

Setterfield: I think that you are misunderstanding something here. When lightspeed is higher, any lump of radioactive matter will decay more quickly. If neutrons are involved, then with a higher decay rate there will also be more of them. However, the only material emitting neutrons on the early earth was the band of radioactive material deep inside the earth's mantle. There the flux of neutrons was greater, BUT since it was deep inside the earth, these neutrons never reached the surface and so no living tissue was damaged by them. It was due to the Flood process that this material started to be brought to the surface and cause damage. This is one likely reason why the lifespan of the patriarchs dropped dramatically after the Flood and after the continental division in the days of Peleg. In each case, there was a bottleneck in the population and in each case there was a significant amount of radioactive material brought to the surface which probably did genetic damage. The additional point I want to mention involves nitrogen 14, neutrons, and the production of carbon 14. Nitrogen 14 interacts with atmospherically produced neutrons to form C14 and a proton. The question in this case is the origin of these atmospheric neutrons. Their production is dependent entirely on the number of cosmic rays impinging on our atmosphere from the depths of space. An additional consideration is the number of solar flares which produce magnetic storms that affect the earth's magnetic field. This magnetic field shields the earth from some cosmic rays by deflecting them away from the planet. As a consequence, the earth's magnetic field plays a part in the process of neutron production. But the prime unknown in the whole neutron production scenario is the flux of cosmic rays from space. We need to look at this. (I might mention that there is a significant body of research indicating that the magnetic field of the earth was stronger in the past. 
If this is true, then more cosmic rays would have been deflected, actually helping life on earth to thrive.) By April of 2002 it was established that there are two sources for cosmic rays, and that nearly 90% of cosmic rays were high speed protons, about 9% were alpha particles (helium nuclei) and about 1% were electrons with about 0.25% being nuclei of other elements. The high energy cosmic rays appear to originate in the cores of distant galaxies where quasars once shone out with super brilliance. During the initial period when the cores of galaxies were active quasars, the massive radiation produced would dampen cosmic ray acceleration, thereby sapping most of their energy. It was only when the quasar became dormant around the remaining super-massive black hole that the environment was favourable for the production of high energy cosmic rays. High energy cosmic rays have been shown to be associated with 4 nearby elliptical galaxies with spinning super-massive black holes containing at least 100 million solar masses. Elihu Boldt from NASA's Goddard Space Flight Centre reported their findings at a joint meeting of the American Physical Society and the American Astronomical Society in Albuquerque [Science News, 27 April, 2002 in a review by Ron Cowan]. Under these circumstances, it becomes apparent that high energy cosmic rays were not produced in the early cosmos but became prominent some significant length of time later. Neutrons from that source would thus not be part of the early earth's atmospheric envelope. Radiation damage caused by neutrons from high energy cosmic rays would thereby be minimal, as would be the production of C14 in the early days of earth history. A second source of cosmic rays lies within our own galaxy and produces the low energy variety. The findings of a second study published in Nature for 25 April, 2002 by Enomoto and his colleagues reported that cosmic rays have for the first time been positively linked to a supernova remnant. 
It had been known for some time that calculations indicated that supernova remnants were the only possible environment for these particles to be accelerated to high enough velocities apart from super-massive black holes. The supernova remnant is a shell of material blown out from a central star that has exploded. Shock waves traveling through this material can accelerate cosmic ray particles up to the required energies. But that is not the end of the story. The tangled web of magnetic fields within our galaxy (and typically for all galaxies) traps (and sometimes accelerates) the low energy cosmic ray particles within the galaxy. Thus the numbers of low energy cosmic rays build up with time rather like water being trapped in a dam on a river. As a consequence, the low energy cosmic ray flux becomes greater with time. In addition to this, the number of supernova remnants also builds up with time. It would therefore appear that the production of neutrons in the earth's atmosphere by low energy cosmic rays would become greater with time, and that the production of C14, and radiation damage from that source would be minimal in the early days of earth history.

Does the Universe Show False Maturity?

Setterfield: Inherent within the redshift data for cDK is an implied age for the cosmos both on the atomic clock and on the dynamical or orbital clock. These ages are different because the two clocks are running at different rates. The atomic clock runs at a rate that is proportional to light speed, and can be assessed by the redshift. Originally this clock was ticking very rapidly, but has slowed over the history of the universe. By contrast, the dynamical or orbital clock runs at a uniform rate. The atomic clock, from the redshift data, has ticked off at least 15 billion atomic years. By contrast, the orbital clock, since the origin of the cosmos, has ticked off a number of years consistent with the patriarchal record in the Scriptures. (1/29/99) For further material on sedimentation and the early earth, please see "A Brief Earth History".

Setterfield: Yes, I guess it's theoretically possible to alter the characteristics of the vacuum locally in order to increase the speed of light, and this may have implications and applications for starships. However, these applications may be a considerable way into the future. We can alter the structure of the vacuum locally in the Casimir effect in which the speed of light perpendicular to the plates increases. This effect, however, is small because only a limited number of waves of the zero point field are excluded from between the plates. Haisch, Rueda, and Puthoff are in fact examining a variety of possibilities with NASA in these very areas for spaceship propulsion. More locally, I envisage a time when it may be possible to change the characteristics of the vacuum in a large localised container in which radioactive waste may be immersed. As a consequence, the half-lives would be shortened and the decay processes speeded up, but there would be no more energy given off in that container than if the material was decaying at the normal rate outside. The energy density would remain constant. What happens in this kind of thing is that the energy density is determined by the permittivity and permeability of free space. When the speed of light is high, the permittivity and permeability are low, proportional to 1/c. As a consequence, the energy density of emitted wavelengths is also proportional to 1/c. However, because radioactive decay effectively has a 'c' term in the numerator for each of the decay sequences, this means that there will be c times as much radiation emitted. However, because the energy density of the radiation is only 1/c times as great, the effective energy density remains constant. Under these circumstances, some practical applications of this may be possible in the foreseeable future, but a lot of development would still have to occur.
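
The scaling argument in the answer above can be checked with a short Python sketch. It simply encodes the proportionalities stated in the text (permittivity and permeability proportional to 1/c, decay rate proportional to c, energy density per emission proportional to 1/c) together with the standard relation c = 1/sqrt(permittivity x permeability). The function name and the sample multipliers are assumptions made for illustration only.

```python
def relative_quantities(c_multiple):
    """Scale factors relative to today's values, under the proportionalities
    stated in the text. `c_multiple` is light speed in units of today's c."""
    permittivity = 1.0 / c_multiple           # text: proportional to 1/c
    permeability = 1.0 / c_multiple           # text: proportional to 1/c
    # Standard relation c = 1/sqrt(eps * mu), used here as a consistency check
    implied_c = 1.0 / (permittivity * permeability) ** 0.5
    decay_rate = c_multiple                   # text: 'c' term in the numerator
    energy_density_per_emission = 1.0 / c_multiple
    net_energy_density = decay_rate * energy_density_per_emission
    return implied_c, decay_rate, net_energy_density

# Hypothetical multipliers, chosen only to illustrate the scaling
for k in (1.0, 10.0, 1.0e6):
    print(k, relative_quantities(k))
```

For every multiplier the implied light speed matches the input and the net energy density stays at 1, which is the cancellation the answer describes.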

Setterfield: Regarding the spectral lines of hydrogen question: this is a common statement by those who have not looked in any detail at the cDK hypothesis. It shows a complete misunderstanding of what is happening. When the speed of light is higher, there is no change in the wavelength of emitted radiation. So the wavelengths of hydrogen and its spectral lines will be unchanged by the changing speed of light. What does change is the *frequency* of light in transit, because light speed equals wavelength times frequency. Because wavelength is constant, frequency changes in lockstep with the speed of light. Therefore, as light speed changes in transit, the frequency automatically changes, so that when light arrives at the observer on earth, no difference in frequency of a specific wavelength will be noted compared with laboratory standards. At the moment of emission, the wavelength of a given spectral line is the same as our laboratory standard, but the frequency, which is the number of waves passing per second, will be higher in proportion to c. In transit those wavelengths remain constant, but as lightspeed decays, the frequency drops accordingly, so that, at the moment of reception, the frequency of light from any given spectral line will be the same as the observer's standard. The wavelength will also be unchanged. One point should be re-emphasised in view of current scientific convention. Convention places emphasis on frequency rather than wavelength, but frequency is a derived quantity, whereas wavelength is basic. So if you have a constant wavelength at emission, and that wave train is traveling faster, you will inevitably have a higher frequency.
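
The relation used in this answer, light speed equals wavelength times frequency, can be put in a few lines of Python. This is only an illustrative sketch: the function name, the choice of the hydrogen-alpha line (about 656.28 nm) and the multiplier of 1000 are assumptions for the example, not values taken from the cDK work.

```python
C_NOW = 299_792_458.0  # current speed of light in m/s

def frequency_hz(wavelength_m, c_multiple=1.0):
    """Frequency of a wave of fixed wavelength when light speed is
    `c_multiple` times today's value (frequency = speed / wavelength)."""
    return c_multiple * C_NOW / wavelength_m

h_alpha = 656.28e-9  # hydrogen-alpha wavelength in metres (held constant)
f_now = frequency_hz(h_alpha)                      # frequency at today's c
f_then = frequency_hz(h_alpha, c_multiple=1000.0)  # hypothetical higher c
print(f_then / f_now)  # ratio of frequencies equals the ratio of light speeds
```

With the wavelength held fixed, the frequency ratio tracks the light-speed ratio, which is the lockstep behaviour described above.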

A Series of Questions from One Correspondent

Setterfield: I have assumed that at the very beginning of the cosmos it was in a small, hot dense state for two reasons. First, there is the observational evidence from the microwave background. Second, there is the repeated testimony of Scripture that the Lord formed the heavens and "stretched them out." I have not personally stipulated that this stretching out was completed by day 2 of Creation week. That has come from Lambert Dolphin's interpretation of events. What we can say is that it was complete by the end of the 6th day. It may well have been that the stretching out process was completed by the end of the first day from a variety of considerations. I have yet to do further thinking on that. Notice that any such stretching out would have been an adiabatic process, so the larger the cosmos was stretched, the cooler it became. We know the final temperature of space, around 2.7 degrees Absolute, so if it has been stretched out as the Scripture states, then it must have been small and hot, and therefore dense initially, as all the material in the cosmos was confined in that initial hot ball.

Setterfield: Please distinguish here between what was happening to the material within the cosmos, and the very fabric of the cosmos itself, the structure of space. The expansion cooled the material enclosed within the vacuum allowing the formation of stars and galaxies. By contrast, the fabric of space was being stretched. This stretching gave rise to a tension, or stress within the fabric of space itself, just like a rubber band that has been expanded. This stress is a form of potential energy. Over the complete time since creation until recently, that stress or tension has exponentially changed its form into the zero-point energy (ZPE). The ZPE manifests itself as a special type of radiation, the zero-point radiation (ZPR) which is comprised of electromagnetic fields, the zero-point-fields (ZPF). These fields give space its unique character.

Setterfield: Yes! As more tensional energy (potential energy) became exponentially converted into the ZPE (a form of kinetic energy), the permittivity and permeability of the vacuum of space increased, and light-speed dropped accordingly.

Setterfield: Yes! It has been shown by Harold Puthoff in 1987 that it is the ZPE which maintains the particles in their orbits in every atom in the cosmos. When there was more ZPE, or the energy density of the ZPF became higher, each and every atomic orbit took up a higher energy level. Each orbit radius remained fixed, but the energy of each orbit was proportionally greater. Light emitted from all atomic processes was therefore more energetic, or bluer. This process happened in jumps as atomic processes are quantised.

Setterfield: Yes! The stars within our own Milky Way galaxy will not exhibit any quantum redshift changes. The first change will be at the distance of the Magellanic Clouds. However, even out as far as the Andromeda nebula (part of our local group of galaxies and 2 million light-years away) the quantum redshift is small compared with the actual Doppler shift of their motion, and so will be difficult to observe.

Setterfield: That is correct! Because these distant galaxies are so far away, their emitted light is taking a long time to reach us. This light was therefore emitted when atoms were not so energetic and so is redder than now. Essentially we are looking back in time as we look out to the distant galaxies, and the further we look back in time, the redder was the emitted light. The light from the most distant objects comes from about 20 billion light-years away. This light has reached us in 7,700 years. The light initially traveled very fast, something like 10^10 times its current speed, but has been slowing in transit as the energy density (and hence the permittivity and permeability) of space has increased.

Setterfield: Yes! There is an answer. When the speed of light was about 10^10 times its current speed, as it was initially, observers on earth could see objects 76,000 light years away by the end of the first day of creation week. That is about the diameter of our galaxy. Therefore the intense burst of light from the centre of our galaxy could be seen half way through the first day of creation week. This intense burst of light came from the quasar-like processes that occurred in the centre of every galaxy initially. Every galaxy had ultra-brilliant, hyperactive centres; ours was no exception. After one month, light from galaxies 2.3 million light years away would be visible from earth. This is the approximate radius of our local group of galaxies. So the Andromeda spiral galaxy would be visible by then. After one year, objects 27 million light-years away would be visible if telescopes were employed. We now can see to very great distances. However, since we do not know the exact size of the cosmos, we do not know if we can see right back to the "beginning."

Setterfield: Absolutely correct!

Setterfield: The highly energetic beginning of the universe was referring to the contents of the cosmos, the fiery ball of matter-energy that was the raw material that God made out of nothing, that gave rise to stars and galaxies as it cooled by the stretching out process. By contrast, the ZPE was low, because the tensional energy in the fabric of space had not changed its form into the ZPE. A distinction must be made here between the condition of the contents of the cosmos and the situation with regards to the fabric of space itself. Two different things are being discussed. Note that at the beginning the energy in the fabric of space was potential energy from the stretching out process. This started to change its form into radiation (the ZPE) in an exponential fashion. As the tensional potential energy changed its form, the ZPE increased.

Setterfield: First, the matter of the Higgs boson. Although it has been searched for, there is as yet no hard experimental evidence for its existence - we are still waiting for that. As for its role in mass through interaction, I strongly suggest that you read an important article entitled "Mass Medium" by Marcus Chown in New Scientist for 3rd Feb. 2001, pp.22-25. There it is pointed out that, even if the Higgs is proven to exist, it may not be the answer we seek. Instead, an alternative line of enquiry is opening up in a very positive way. This line of enquiry traces its roots to Planck/Einstein/Nernst and can reproduce the results of quantum physics through the effects of the Zero-point Energy (ZPE) of the vacuum. Those following this line of study can account for mass through the interaction of the ZPE with massless point charges (as in quarks). Indeed, gravitational, inertial and rest-mass can all be shown to have their origin in the effects of the ZPE. This mass comes from the "jiggling" of massless particles as the electromagnetic waves of the ZPE impact upon the particles. This links back to another query that you had, namely the reason for the speed of light in the vacuum. This speed, c, is related to the ZPE through the manifestation of virtual particle pairs in the paths of photons. As photons travel through the vacuum, there is a continual process of absorption of the photon by virtual particles, followed very shortly after by its re-emission as the virtual particle pairs annihilate. This process, while fast, does take a finite time to accomplish. It's akin to a runner going over hurdles. Between hurdles the runner maintains his maximum speed, but the hurdles impede progress. The more hurdles over a set distance, the longer it takes to complete the course. This is essentially the reason for the slowing of light in glass, or water etc. Atoms absorb photons, become excited, and then re-emit the photons of light. 
The denser the substance, the slower light travels. Importantly, the strength of the ZPE governs the number of virtual particles in the paths of photons. It has been shown that when the energy density of the ZPE is decreased (as in the Casimir effect, where the energy density of the vacuum is reduced between two parallel metal plates), then lightspeed will be faster. The reason is that there are fewer virtual particles per unit length for light photons to interact with. It has been shown that this process can account for the electric permittivity and magnetic permeability of the vacuum. A summary of some of this can be found in an article by S. Barnett in Nature, Vol.344 (1990) p.289. A more comprehensive study by Latorre et al in Nuclear Physics B Vol.437 (1995), p.60-82 stated in conclusion that "Whether photons move faster or slower than c [the current speed of light] depends only on the lower or higher energy density of the modified vacuum respectively". Thus a vacuum with a lower energy density for the ZPE will result in a higher speed of light than a vacuum where the energy density of the ZPE is higher. On the matter of tachyons, an important item is currently being considered. Just as virtual particles exist with the ZPE, so also do virtual tachyons. A recent study has shown that the Cherenkov radiation from these virtual tachyons can account accurately for the microwave background [T. Musha, Journal of Theoretics, June/July 2001 Vol.3:3]. Your final question relates to the increase in mass with increase in speed of particles. Again the reason can be found in the ZPE. As mentioned in the New Scientist article referred to above, radiation from the ZPE essentially bounces off the accelerating charge and exerts a pressure which we call inertia. The faster the movement, the greater the pressure from the ZPE, and so the greater the inertial mass. 
This has been demonstrated in a mathematically rigorous way in peer-reviewed journals and a consistent theory is emerging from these studies. I hope this is of assistance. If you have further questions, please do not hesitate to get back to me.
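
The runner-and-hurdles picture in this answer can be turned into a toy calculation. The Python sketch below is only an illustration of that analogy, not a model from the cited papers: the function name, the fixed delay per interaction, and all of the numbers (in arbitrary units) are assumptions.

```python
def effective_speed(distance, free_speed, n_interactions, delay_per_interaction):
    """Average speed over `distance` when travel between interactions happens
    at `free_speed` and each interaction (hurdle) adds a fixed delay."""
    travel_time = distance / free_speed + n_interactions * delay_per_interaction
    return distance / travel_time

# Arbitrary illustrative units: same course, same free speed, more hurdles
few = effective_speed(100.0, 10.0, n_interactions=5, delay_per_interaction=0.5)
many = effective_speed(100.0, 10.0, n_interactions=20, delay_per_interaction=0.5)
print(few, many)  # the course with more hurdles gives the lower average speed
```

In this toy picture the speed between hurdles never changes; only the number of delays per unit distance does, which is how the answer relates the density of virtual particle pairs to the measured speed of light.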

Setterfield: Your first question is in fact two-fold – it concerns the behaviour of a light beam transmitted by atoms in a transparent medium compared with the behaviour of light transmitted through the vacuum that includes virtual particles. In the first instance you state “that when an atom absorbs a photon and then re-radiates it, the direction in which the photon is re-radiated is not necessarily the same as the direction of the original photon.” My response to this follows the approach adopted by Jenkins and White, Fundamentals of Optics third edition section 23.10 entitled ‘Theory of Dispersion’, pp.482 ff. The initial point that they make is that as any electromagnetic wave traverses the empty space between molecules, it will have the velocity that it possesses in free space. But your question effectively asks something slightly different, namely, how is it possible for the light wave to be propagated in substantially the same direction as it was initially traveling in? You point out that atoms effectively scatter and/or re-emit the incoming photons in any direction. But here Jenkins and White make an important point. They demonstrate that “the scattered wavelets traveling out laterally from the [original] beam [direction] have their phases so arranged that there is practically complete destructive interference. But the secondary waves traveling in the same direction as the original beam do not thus cancel out but combine to form sets of waves moving parallel to the original waves” (their emphasis). I think that this deals with the first aspect of your question. The second aspect of the question essentially asks why the original direction of a light photon is maintained after it has been absorbed by a virtual particle pair and re-emitted upon their annihilation. In other words, why does the emitted photon travel in the same direction as the original photon? 
In order to easily visualize why, it may be helpful to look at this in a slightly different way which is still nonetheless correct. One may consider a traveling photon to be briefly transformed into a virtual particle pair of the same energy as the original photon. Furthermore, the dynamics of the situation dictate that this particle pair will move forward in the original photon’s direction of travel. However, the virtual particle pair will travel a distance of less than one photon wavelength before they annihilate to create a new photon with characteristics indistinguishable from the old one. This whole process complicates the smooth passage of photons of all energies through space by making the photon travel more slowly. This is explicable since on this approach the photon spends a fraction of its existence as a virtual particle pair which can only travel in the direction of the original photon’s motion at sub-light velocities. Implicit in this whole explanation is the fact that both virtual particles and the new light photon must all move in the same direction as the original photon, which then answers your question. This explanation also impinges on your final question. This effectively asks why photons of all energies are uniformly affected by the process of absorption by the virtual particles, which thereby ensures that photons of all energies (or wavelengths) travel at the same velocity. If the alternative explanation given above is followed through, the original photon transforms into a virtual particle pair, which then annihilate to give the original photon. On this basis, it immediately becomes apparent that the energy (and wavelength) of the virtual particle pair results directly from the energy of the original photon, and this energy is then conserved in all subsequent interactions. I trust that this answers your questions. If you have further issues, please do not hesitate to get back to me.

Setterfield: If the oscillation is entirely mechanical (piezo-electric effect), then it will not be affected by any changes in the ZPE. There are, however, some elements which are mechanical, and others which seem to be atomic (Is the behavior of the molecules themselves atomic or mechanical in this case?). However, if we go with the observations, the measurements done on the speed of light using quartz oscillator clocks did in fact show a decrease in the speed of light over time. This contrasts with atomic clocks which have run rates in synch with the speed of light, and therefore cannot show c as changing. Because the quartz clocks are showing the change, this seems to indicate that they are not affected – or as affected (?) – by a changing ZPE. If they were affected by the ZPE, they would show no change in the speed of light at all. Quartz oscillator clocks came in around 1949-1950. The speed of light had, at this point, already been declared constant by Birge; however it was not until the atomic clock measurements of the early eighties that the speed of light was ‘officially’ declared constant. So there were some measurements taken after Birge, and the decay was showing up as continuing. This was measured using those quartz clocks, so the observational evidence we have from this indicates that the ZPE changes are not affecting them. This needs more research, obviously, but these are my current feelings about the matter.

Calculating atomic vs. orbital time

Setterfield: Thanks for the question. The response is YES! There is a formula for calculating orbital years from atomic years. The formula and actual examples of how it is used can be found in our web article "Data & Creation: The ZPE - Plasma Model." If you go to equations (14) to (20) you will see how to do it. On the other hand, if the math is a problem, here is a brief synopsis: 5680 BC = Creation -12.3 billion atomic years. If you need further help after reading the article, please get back to me.

Global Warming and the "Carbon Threat"

Setterfield: I refer you to the work of Henrik Svensmark, head of the Center for Sun-Climate Research at the Danish National Space Center, and his co-workers. It starts with the cosmic ray flux from outside our solar system due to supernova explosions and other interstellar processes. When these cosmic rays reach the lower atmosphere, they ionize the air, releasing electrons that aid in the formation of cloud condensation nuclei. When the cosmic ray flux is high, so, too, is cloud formation and this reflects more solar energy into space, cooling the planet. The next important link comes from solar activity. When the Sun is active, the solar wind (and its associated electric and magnetic fields) deflects the cosmic rays around the earth and prevents them from entering our atmosphere. Thus cloud-formation is reduced, less solar radiation is reflected back into space, and the planet inevitably heats up. So global warming occurs when the sun becomes more active. Importantly, we find Mars and other planets are also heating up at the same time as earth. The next important clue is something with which most of us are familiar. When you heat up a soda pop bottle, the carbon dioxide comes out of solution. As the earth heats up, so, too, do the oceans. They then give up a portion of their dissolved carbon dioxide which goes into the atmosphere and boosts the CO2 content there. So it is not human activity that is boosting the carbon content, but solar activity. But we can go further. This warming increases the temperature gradient between the equator and the poles. This means that the mechanism that drives our atmospheric processes is more energetic. Consequently, we get greater extremes in our weather, while the average position only changes marginally. Therefore storms are becoming more violent. About five years ago, New Scientist reported that the average height of storm waves in the Atlantic had increased by 10% since the 1960's and oil rigs etc. 
were having to take this into account. This calls to mind the comment in Luke 21:25 that the sea and the waves would be roaring just before the Lord's Return. This then links with the statement in Revelation 16:8 that indicates the sun would become hotter and Isaiah 30:26 which also states that the moon would become brighter. From these references, it would seem possible that we are at the beginning of something which is potentially important. However, our attempts at regulating the carbon balance may not make any significant difference. An additional note: Just a brief note on another aspect of the Carbon debate. It all began with an Oceanographer named Roger Revelle who became the Director of the Scripps Oceanographic Institute in La Jolla, San Diego. Among others he hired Hans Suess, a noted chemist from the University of Chicago, and co-authored a paper with him in 1957. That paper raised the possibility that carbon dioxide might be creating a greenhouse effect and causing atmospheric warming, and resulted from Suess's interest in carbon in the environment from fossil fuels. Basically, the paper was a reason to obtain more funding for carbon research. Revelle then hired a Geochemist named David Keeling to devise a method to measure the atmospheric carbon dioxide content. In 1960 Keeling published his first paper which showed an increase in atmospheric carbon dioxide and linked it with burning of fossil fuels. These two papers became the foundation of the science on global warming, even though they offered no proof that carbon dioxide was a greenhouse gas. Nor did they explain how 300 to 400 parts per million of this gas in our atmosphere could have any significant effect on temperatures. Several hypotheses emerged in the 1970's and 1980's on how this 4 hundredths of one percent might cause significant warming, and as a consequence, the money and environmental claims kept building up. There is another link in this chain. 
Back in the 1960's this research came to the attention of a Canadian-born UN bureaucrat named Maurice Strong. Strong was interested in achieving world government through the UN, and was interested in any issue that could further that aim. The research on global warming through carbon dioxide caught his interest. He organized a World Earth Day event in Stockholm, Sweden in 1970, and developed a committee of scientists, environmentalists and political operatives from the UN to continue a series of such meetings. Strong then developed the concept that the UN could demand payment from the advanced nations for climate change damage from their burning of fossil fuels to benefit underdeveloped nations; in other words, a carbon tax. In order to do this, he needed stronger scientific evidence, so he championed the establishment of the UN Intergovernmental Panel on Climate Change. All those in this diverse group obtained UN funding to produce the science needed to prove that the carbon dioxide problem required that the burning of fossil fuels should be stopped. Over the past 25 years they have been very effective. Hundreds of scientific papers, four major international meetings, and untold news stories about a climatic Armageddon, as well as a Nobel Prize shared with Al Gore, have all emanated from this UN IPCC. However, the person who started this whole scenario, Roger Revelle, co-authored a paper in 1991 with Chauncey Starr of the Electric Power Institute, and Fred Singer of the US Weather Satellite Service in the journal "Cosmos." They urged more research and begged scientists and governments not to move too fast to curb carbon dioxide emissions because the true impact of CO2 was not at all certain. While Al Gore has dismissed Revelle's about turn as the actions of a senile old man, Dr Singer noted that Revelle was considerably more certain that caution was justified than he was of the original proposal [John Coleman: The Amazing Story behind the Global Warming Scam]. 
More questions on climate change, November, 2019

It is rare for the full story of what is going on with climate change to be published, all because of political correctness in the scientific field. The culprit is not human activity, but the sun. Let me explain what was printed in the serious science journals, not just those trying to be politically correct and preserve funding options: The Sun’s magnetic fields and winds keep cosmic rays away from the solar system, particularly the inner solar system. Cosmic rays are mainly nuclei of hydrogen and helium, with some nuclei from other elements as well. Cosmic rays are thus charged particles. The majority of cosmic rays originate from our own and other galaxies from a variety of processes we need not go into here. Since moving charged particles like this are deviated in a magnetic field, cosmic rays are deviated away from the solar system by the magnetic field of the sun. However, when the sun’s activity weakens, its magnetic field weakens also, and cosmic rays move in and bombard the earth and other planets. When cosmic rays smash into our atmosphere, they produce a shower of particles and ions. These particles have been proven in the lab and in our atmosphere to act as nuclei that seed clouds; more particles, more clouds. This cloudiness shields the earth from the sun, and the earth cools. A cosmic ray influx is thus a cooling phenomenon. So, a quiet sun is less active; its magnetic field lessens; cosmic rays pour in, and the Earth cools down. An active sun gives a hotter earth. So, the Medieval Warm Period near year 1000 AD and the cold period in the Little Ice Age 1300-1900 AD fit well with changes in solar activity. Despite this evidence, there was a missing piece to the puzzle, namely why earth’s temperatures were controlled by the sun. That missing piece has now been found to be the cosmic rays. This link has recently been published in the scientific literature by a team of Russian physicists led by Y. I. 
Stozhkov and (primarily) Henrik Svensmark’s team from Denmark. Good summaries can be found here and here. These and other data show that the sun’s activity has increased since the 1800’s, even though it is modulated by the 11-year (or, more correctly, the 22 year) cycle. We are currently going through a prolonged minimum on that cycle which is causing the overall warming trend to be moderated. A paper by John Eddy to the International Symposium on Solar-Terrestrial Physics in 1976 and a number of other papers since 1943 confirm this. A rise of ¼ % in the sun’s energy output from 1900-1950 has been documented in Eddy’s paper. So the sun is heating up, but going through a quiet phase at the moment as part of its 11-year cycle. There is one additional piece of information that explains what is happening on earth itself. When the sun’s activity is greater, the band of ocean water around the equator (between the tropics of Cancer and Capricorn) gets warmer. This has two effects. First, the ocean water gives off huge quantities of dissolved carbon dioxide like a soda pop bottle in the sun. The CO2 content of our atmosphere thus rises significantly. This is not caused by human activity. Second, it means the temperature gradient from the tropics to the poles has increased as ice at the poles maintains a relatively stable temperature near zero Celsius. A higher temperature gradient means that atmospheric processes are more violent or extreme; storms & hurricanes etc. are more violent; and temperature extremes are greater. It is all due to the sun. One final point. From Isaiah 30:26 and Revelation 16:8-9, this present heating up of the sun may be just a prelude to a much more serious increase in solar activity. Jesus warned us that “there would be signs in the sun, moon and stars” Luke 21:25, and this is something that will really grab attention of the people on earth. Not only would the sun and moon be affected, but the planets would be correspondingly brighter. 
Revelation 16:15 may be considered in this context. This current brightening of the planets had been noted as being about 2% for Uranus and Neptune (which were being studied explicitly) in the period 1965 to 1971 when the report was issued in New Scientist (Vol. 52:146, 1971). Other planets were also reported brightening. So these events suggest that we may be living in Pre-Tribulation days.

What About Gigantism in the Fossil Record?

Setterfield: These rats in Timor were large by comparison with today's usual varieties. That is one thing. However, there are a series of fossil types throughout the geological column whose gigantism (not just abnormally large sizes) poses some very difficult problems. For example, dragonflies in the Paleozoic Era, about 300 million atomic years ago, had wingspans of about 3 feet or so with the rest of their bodies proportionally larger. The scorpions in the Paleozoic, the eurypterids, attained sizes of the order of 8 feet long. One magnificent specimen used to stand at the entrance to the Museum in Adelaide, South Australia. It was guaranteed to impress, if not unnerve, the first-time visitor. We are all familiar with the largest land animals that ever lived, namely the dinosaurs. But there is an ongoing debate in geological/paleontological circles as to how such magnificent beasts could function. The problem is that, at face value, nerve impulses from the tail of, say, Diplodocus, would take of the order of 2 to 3 seconds to get the message to his brain. This gives an unrealistic response time to any problem the dinosaur had back there. Although a number of solutions have been suggested, none has taken the geological community by storm, and they are not viable explanations for the large variety of similar problems elsewhere in the geological column. But there is, potentially at least, a common answer to all these puzzles. The answer lies in the fact that the strength of the Zero Point Energy (ZPE) was lower back in the past. This means that the electric and magnetic properties of the vacuum were different. In addition, electric currents were stronger, voltages were higher, and electrons and ions moved faster. This has implications for plasma physics and the EU (Electric Universe) approach, since it may well provide an explanation as to why the ancients saw things in the heavens which we do not today. 
Be that as it may, the point that immediately grabs our attention is that the nervous systems of all animals, and many of their bodily functions, are electrical in nature. Nerves conduct electrical impulses. Bodily functions are often performed through ion transfer, which deals with electrical charges. Thus nerve impulses traveled more swiftly and more efficiently when the ZPE was lower, and any bodily function involving ion transfer would also benefit. This would make the heart, brain and other organs much more efficient systems than we have today. The limiting size for arthropods such as the dragonfly and the eurypterids is largely determined by the nervous system. They shed their exoskeletons periodically and form new ones which accommodate their growth. But that growth is limited by the efficiency of their electrical systems. So today they are small; but back then, when the efficiency was significantly higher, their bodies were correspondingly larger. It is also true that insect sizes today are limited by the amount of oxygen they are able to take in through the spiracles in the sides of their abdomens and then diffuse through their bodies by their multiple tracheae. But research has shown that the tracheae themselves will enlarge and branch out to accommodate a larger size if other factors allow this. The prime factor is the limitation imposed by the nerve impulse system. When that is more efficient, larger tracheae will not be a problem. As for the dinosaurs, when the ZPE strength was lower, they could get a message from tail to brain in times approximating to 1/10 of a second. The conclusion is that the EU proposal involves far more than just plasma physics; it involves life itself. And when the additional factor of the lower ZPE in the past is factored in, a whole host of geological problems are also answered.

2012 Note: The published article Zero Point Energy and Gigantism in Fossils is highly relevant here.
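The timing argument in this answer is simple arithmetic: travel time equals nerve path length divided by conduction speed. The sketch below works backwards from the two times quoted (2 to 3 seconds, and about 1/10 of a second) to the conduction speeds they would imply. The 25 m path length and the function names are assumptions chosen for illustration; they do not come from the discussion itself.

```python
# Assumed tail-to-brain nerve path length for a large sauropod, in metres.
# This is an illustrative assumption, not a figure given in the text.
PATH_LENGTH_M = 25.0


def implied_speed(path_length_m, travel_time_s):
    """Conduction speed (m/s) needed to cover the path in the given time."""
    return path_length_m / travel_time_s


def travel_time(path_length_m, speed_m_per_s):
    """Time (s) for an impulse to cover the path at the given speed."""
    return path_length_m / speed_m_per_s


if __name__ == "__main__":
    # Times quoted in the text: 2 to 3 s (present-day value), 0.1 s (lower ZPE).
    for label, seconds in [("2 s", 2.0), ("3 s", 3.0), ("1/10 s", 0.1)]:
        speed = implied_speed(PATH_LENGTH_M, seconds)
        print(f"{label}: implied conduction speed {speed:.1f} m/s")
```

Under the assumed path length, the quoted change from 2 to 3 seconds down to 1/10 of a second corresponds to a conduction speed some 20 to 30 times higher; a different assumed length scales both speeds equally and leaves that ratio unchanged.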

Is the sun responsible for changes in radioactive decay rates?

Setterfield: Many thanks for the links; they are appreciated, even though I do already have them. Oftentimes it is someone like you who alerts me to something happening that I need the information about. Strictly speaking, it is not the sun which is affecting the rates of radioactive decay. You will note that the earthbound radioactive isotopes were affected 30 hours before the flares came from the sun. What is happening is that some electromagnetic pulse or similar phenomenon is being propagated from the outer solar system towards the sun, and it passes the earth before it hits the sun and causes a flare. If the effect causing the flare came from within the sun, it would be propagated to the sun's surface before it got to the earth. So it is not some strange particle that is causing the effect, as some suggest, but rather something that has affected the electric and/or magnetic properties of the vacuum. Since electron streams have already been detected coming from the outer solar system into the sun, it is likely that something similar may be the cause of the anomaly. Of course, an electron stream constitutes an electric current, and such currents in space can be of the order of a million amperes. This would locally affect the vacuum because of polarization of virtual particles, and thereby change the strength of the ZPE locally, which in turn affects the rate of ticking of atomic clocks. The electron flux would bring about a higher concentration of virtual particles through polarization and hence locally enhance the strength of the ZPE. This in turn would slow atomic clocks. As for the 33 day cycle, you are probably on the right track in stating that it is some external influence driving the sun rather than the other way around. In plasma physics the rate of spin of the sun is also dependent upon the strength of the electric current driving the spin. If that current is modulated at a period of 33 days, that explains the sun's rotation and also the effects we see in the lab. Plasma physics, with electric and magnetic effects applied to astronomy, is only just beginning to explore these possibilities. So many thanks for your insights, which have got me thinking further along these lines.

March 2, 2012 note: Further research regarding this question has resulted in a much more definitive answer which can be found here.

Setterfield: As far as time and entropy are concerned, there may be some common but incorrect thinking on this matter. Time is measured, simply, by the movement of mass through space. We live in a time/space/mass continuum, as Genesis 1:1 indicates -- all of which was created by God from nothing. Entropy, on the other hand, is defined as increasing disorganization. This implies everything was organized to begin with, but, as Romans 8 tells us, is now "in bondage to decay." So time started at the beginning, but entropy would have been the result of the fall of man. As far as God, Satan, Eternity, etc. are concerned, we tend to think of God as inhabiting eternity. However, in Genesis 1:1 we find the words "In the Beginning, God created the heavens and the earth." So we have the calling into being out of nothing of Time (beginning), Space (the heavens) and Matter (the earth). Throughout the Scriptures we also have 3 heavens mentioned: the birds of heaven (our atmosphere), the stars of heaven (outer space) and the Heaven of heavens (God's Throne). Therefore, God's throne exists as part of the present created order, and so is dependent upon time. Note that the heavens and all the host of them (the stars and the angels) were proclaimed "very good" at the end of Creation Week. Therefore the angels themselves are time-dependent creatures like us and are limited to this time-space continuum, even though we do not know the exact location of the third heaven or God's Throne. So the concept of Eternity as something distinct from time may also be incorrect. Indeed, in Ephesians 2:7 we are told that when we are seated with Christ in the New Heavens God will show us His grace and kindness throughout "the ages to come". This implies that there is some measure of time involved, and therefore our concept of Eternity as distinct from time may need to be adjusted. The Tree of Life gives its fruit in season. There is a river flowing from under the Throne. These things indicate the movement of mass through space. So there is some sort of 'time.' It just may not be as we understand it now.

Bradley's Measurements Questioned

Setterfield: Thank you for your comments and questions. As far as the calculation of the speed of light by Bradley and his method are concerned, some comments are important. While it is true that the sun and solar system are moving through space at a definite speed and in a definite direction, that does not alter the validity of Bradley's results or those from that method. The reason is that what is being measured is the size of the long axis of the ellipse traced by stars annually. The size of the ellipse is governed by the difference between the earth's orbital motion in one part of the year compared with 6 months later when it is moving in the opposite direction. This difference is independent of the speed of the solar system. It is this difference in the earth's velocity that is being measured compared with the (essentially constant) speed of light over that same period. This difference in direction of motion causes a star to trace out an ellipse, while the actual velocity difference governs the size of the long axis of the ellipse. I trust this helps.

The Large Hadron Collider

Setterfield: It is certainly true that there is a lot of hype associated with events in Europe with the Large Hadron Collider. It has been stated that it has confirmed the existence of the Higgs boson. But this is only a probability thing. The particle in question would have existed for an infinitesimally short time and then decayed to photons of energy. All that has been picked up are photons with the likely energy among a whole field of others. The whole issue depends on the science of mathematical probability; in this case the probability of finding photons with the right energy from other processes is the key. Some scientists want a higher probability that something actually caused those specific photons before being convinced. As a result, they believe there may be reason to doubt this much-publicized discovery. It is this probability process, applied to the mass of data to tease out items of potential significance, that results in any "discovery". There has recently been an upgrade to allow the collider to operate at even higher energies. This has been done with the hope that it may reproduce some of the conditions which were expected to exist at the moment of the inception of the cosmos. Whether or not this is achievable is a matter of debate. Even if this is not achieved, it is hoped that some strange particles might emerge from the collision process. If the right ones can be found, it might give a clue as to whether or not so-called "string theory" and its proposed extra dimensions can be backed up by experimental data. Up to this moment, that has not happened. It currently looks like an elegant mathematical theory without any hard physical evidence to support it. But some of those involved with string theory claim that its mathematical elegance is sufficient proof of its validity! There has also been some loose talk about the machine being capable of generating miniature black holes. This is very much to be doubted.
So, unless the report you read actually comes from a reputable science organization or a recognized university or similar college, I would generally ignore it, with the possible exception given below. You ask for a link. The first one below is to the CERN facility itself, which would announce genuine discoveries or at least possible discoveries. The second is to the BBC Science page, which also includes a lot of environmental stuff. But I would expect any important development to be announced there as well. There is the likelihood of some scientific speculation there too, so just read carefully! I hope that is a help.