Data

The data set used in the 1987 Report Note June 2, 2003 Indications from the data

The data set used in the 1987 Report From Lambert Dolphin regarding the statistical analysis of Atomic Constants, Light, and Time When Alan Montgomery and I decided to look at the measurements of c back in 1992 we gathered each and every published measurement from all known sources. This gave us a master list to start with. We could not find any additional published data points or we would have added them to this master list. The master list includes (a) published original actual measurements,(b) "reworkings" of many of these original data points by later investigators, (c) subsequent published values of c which are not new data but actually merely quoted from an original source. (For instance a value published in Encyclopedia Britannica is probably not an original data point). Naturally we needed to work with a unique data set which includes each valid measurement only once. In some cases we had to decide whether the original measurement or a later reworking of a given measurement was to be preferred. In the data set a couple of data points were so far out of line with any other nearby measurements that they are easily classified as outliers. What was humorous to Alan and me is that if we take all the data from the master list, that is, all the raw data from all sources, including spurious points, outliers, duplicates and do a statistical analysis of THAT (flawed) data set the statistical result is STILL non-constant c. (Alan and I did this for fun when we were selecting our "best data" set). We did our calculations on an Excel spread sheet with embedded formulas so we could easily generate various subsets of the data. What we found was that our conclusion of non-constant c was still implied. In other words we tried everything we could think of to prove ourselves wrong, hence our continuing desire to receive valid criticisms of our statistical methods. If anyone has additional c data points that are not on our master list, let us know. 
Our complete data sets are available: http://ldolphin.org/cdata.html

*******

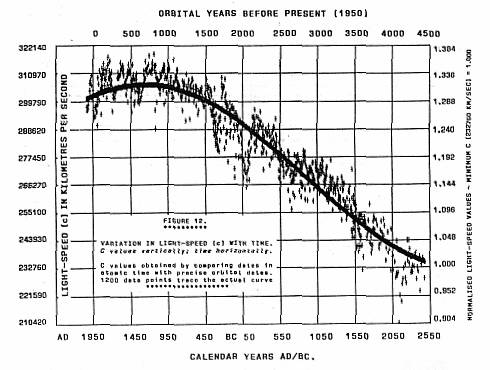

Indications from the data concerning lightspeed

Setterfield: About 1000 AD, roughly. It was not very high. At most, it was about 320,000 km/s or so compared with 299,792 km/s now. There is geological and astronomical evidence that there is an oscillation. The cause of the oscillation may be a slight vibration of the cosmos about its mean position after the end of Creation Week.

Setterfield: As the Vc (variable light-speed) theory is currently formulated (I am working on some changes that may be relevant to this discussion), your assessment of the situation is basically correct. You ask if there is any evidence to show that 'c' has changed since measurements began. Indeed, I believe there is. The 1987 Report canvasses that whole issue along with all the measured data of light speed, Planck's constant and other physical quantities which are associated by theory, being tabulated and treated statistically. The data provide at least formal statistical evidence for a change in c. The error-bars are graphed and can be viewed in the 1987 Report. It is fruitful to look at Figure 1 where the c values by the aberration method from Pulkova Observatory are plotted. Here the same equipment was used for over a century. Importantly, the errors are larger than for most methods, but the drop in c far exceeds the errors. Also tabulated in the Report are the values of c by each method individually, and each of these also displays a drop with time. Again, when the same equipment was used on a second occasion, a lower value was always obtained at the later date. You ask about increased radiation doses. Appendix 2 of Behavior of the Zero Point Energy and Atomic Constants indicates that the energy-density of radiation was lower in the past. As a consequence, even though radioactive decay for example occurred more quickly, the higher decay rate had no more deleterious effects than the lower decay rate today. One area of study that is currently being pursued is the effect on pleochroic haloes. While the radii of the haloes will remain constant, it appears that the higher decay rate may give rise to over-exposed haloes. One might expect a systematic tendency for them to be found in that condition in the oldest rocks in the geological column. However, annealing by heat can eliminate this effect, even with temperatures as low as 300° C.

Setterfield: No, I am not saying c is no longer decaying, but the exponential decay has an oscillation superimposed upon it. The oscillation only became prominent as the exponential declined. The oscillation appears to have bottomed out about 1980 or thereabouts. If that is the case (and we need more data to determine this exactly), then light-speed should be starting to increase again. The first minimum value for light-speed was about 0.6 times its present value. This occurred about 2700 - 2800 BC. This is as close to ‘zero’ as it came. The natural oscillation brought it back up to a peak that had a maximum value of about 310,000 -320,000 km/sec. This was in the neighborhood of about 1000 AD. Today the value is 299,792.458 km/sec. On the graphs, today’s value is the base line. This does not equal either zero itself or a change of zero. It is just the base line.

The following question has to do with this graph.

Setterfield: It should be pointed out that the graph was developed by a comparison of radiometric dates with historical dates. The discrepancy between the two dates is largely due to the behaviour of lightspeed since that is the determining factor in the behaviour of all radiometric clocks. It will be noted that the data points show a scatter which is largely due to the 11 to 22 year solar cycle which affects the carbon 14 content in our atmosphere. The problem as stated in the above question results from a misreading of the graph. If you look carefully, you will note that the years AD are on the left, while the years BC are on the right. In other words, reading the graph from left to right takes us backwards in time. If you notice, the graph on the left hand end finishes at today’s value for c. There is probably also some confusion due to the fact that there is an oscillation in the behaviour of c that is even picked up in the redshift and geological measurements. This oscillation is on top of the general decay pattern. What this graph does is to show that oscillation in detail over the last 4000 years. And yes, the speed of light was lower than its current value in BC times because of that oscillation. However, by about 2700 BC it was climbing above the present value. It is because of this oscillation that carbon 14 dates do not coincide with historical dates. The reason for the oscillation is that a static universe in which the mass of atomic particles is increasing is stable, but will gently oscillate. This has been shown to be the case by Narlikar and Arp in Astrophysical Journal Vol. 405 (1993), p.51. As you know, the quantized redshift evidence suggests that the universe is static and not expanding, but evidence of the oscillation is also there.
In terms of the Zero Point Energy (ZPE) this oscillation effectively increases the energy density of the ZPE when the universe has its minimum size since the same amount of Zero Point Energy radiation is now contained in a smaller volume. The result is that lightspeed will be lower. Conversely, the energy density of the ZPE is less when the universe has its maximum diameter and the speed of light will be higher as a result. I trust that this clears up the confusion.

For statistician Alan Montgomery: I have yet to read a refutation of Aardsma's weighted uncertainties analysis in a peer-reviewed Creationary journal. He came to the conclusion that the speed of light has been a constant. --A Bible college science Professor.

Reply from Alan Montgomery: The correspondent has commented that nobody has refuted Dr. Aardsma's work in the ICR Impact article. In Aardsma's work he took 163 data from Barry Setterfield's monograph of 1987 and put a weighted regression line through the data. He found that the slope was negative but the deviation from zero was only about one standard deviation. This would normally not be regarded as significant enough to draw a statistical conclusion. In my 1994 ICC paper I demonstrated, among other things, the foolishness of using all the data, from methods both with and without the sensitivity needed to address the question. You cannot use a ruler to measure the size of a bacterium. Second, I demonstrated that 92 of the data he used were not corrected to in vacuo and therefore his data was a bad mixture. One cannot draw firm conclusions from such a statistical test. I must point out to the uninitiated in statistical studies that there is a difference between a regression line and a regression model. A regression model attempts to provide a viable statistical estimate of the function which the data exhibits. The requirements of a model are that it must be: (1) minimum variance (condition met by a regression line); (2) homoskedastic, meaning the data are of the same variance (condition met by a weighted linear regression); and (3) not autocorrelated, meaning the residuals must not leave a non-random pattern. My paper thus went a step further in identifying a proper statistical representation of the data. If I did not point it out in my paper, I will point it out here.
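As an illustration only, the three conditions named above (least-squares fit, weighting by uncertainty, and a check for autocorrelated residuals) can be sketched in Python. This is a minimal sketch on synthetic data, not the analysis from the 1994 paper: the function names, the synthetic quadratic data, and the choice of the Durbin-Watson statistic as the autocorrelation check are all assumptions made for the example.

```python
import numpy as np

def weighted_line_fit(t, y, sigma):
    """Fit y = a + b*t by weighted least squares, weights = 1/sigma**2."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    w = 1.0 / np.asarray(sigma, dtype=float) ** 2
    X = np.column_stack([np.ones_like(t), t])   # design matrix [1, t]
    XtW = X.T * w                                # X^T W without forming W
    cov = np.linalg.inv(XtW @ X)                 # parameter covariance
    beta = cov @ (XtW @ y)                       # [intercept, slope]
    return beta, np.sqrt(np.diag(cov))

def durbin_watson(residuals):
    """Durbin-Watson statistic: near 2 means no first-order autocorrelation,
    values well below 2 mean positively autocorrelated residuals."""
    r = np.asarray(residuals, dtype=float)
    return np.sum(np.diff(r) ** 2) / np.sum(r ** 2)

# Hypothetical data: a pure quadratic trend with equal uncertainties.
t = np.arange(20)
y = 0.5 * t ** 2
sigma = np.ones_like(t, dtype=float)

beta, stderr = weighted_line_fit(t, y, sigma)
residuals = (y - (beta[0] + beta[1] * t)) / sigma   # weighted residuals
dw = durbin_watson(residuals)
print("slope:", beta[1], "Durbin-Watson:", dw)
```

Here a straight line is forced through curved data, so the residuals form a smooth U shape and the Durbin-Watson statistic falls far below 2. That is the kind of non-random residual pattern the third condition is meant to rule out.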
Aardsma's weighted regression line was autocorrelated and thus shows that the first two conditions and the data imposed a result which is undesirable if one is trying to mimic the data with a function. The data is not evenly distributed and the weights are not evenly distributed. These biases are such that the final 11 data determine the line almost completely. This being so, caution must be exercised in interpreting the results. Considering the bias in the weights and their small size, data with any significant deviation from them should not be used. It adds a great deal of variance to the line yet never adds any contribution to its trend. In other words, the highly precise data determines the direction and size of the slope and the very low precision data makes any result statistically insignificant. Aardsma's results are not so much wrong as unreliable for interpretation. The Professor may draw whatever conclusions he likes about Aardsma's work but those who disagree with the hypothesis of decreasing c have rarely mentioned his work since. I believe for good reason. (October 14, 1999)

*******

From Lambert Dolphin: I want to fill in some details regarding the fateful year of 1987: I had known of Barry's work for several years when he and Trevor and I decided to publish a joint informal report in 1987. As a physics graduate student at Stanford in the mid '50s I was aware of the historic discussions about the constancy of c and other constants. Across the Bay, at UC Berkeley, in our rival physics lab Raymond T. Birge was at that time well known and respected. I knew he had examined the measured values of c some few years before and decided the evidence for a trend was then inconclusive. I also knew of nothing in physics that required c to be a fixed constant. Therefore the Setterfield and Norman investigation of all the available published data seemed to be a most worthy undertaking.
I was a Senior Research Physicist and Assistant Lab Manager in 1987 and in the course of my work I often wrote White Papers, Think Pieces, and Informal Proposals for Research--in addition to numerous technical reports required for our contracted and in-house research. I could initiate and circulate my own white papers, but often they were written at the request of our lab director as topics for possible future new research. An in-house technical report would be given a Project Number the same as a Research Project for an external client would--provided the internal report took more than minimal effort to prepare and print. Minimal-effort reports were not catalogued. In the case of the 1987 S&N report, I reviewed the entire report carefully, wrote the foreword, approved it all; but the report was printed in Australia, so an internal SRI Project Number was not needed. It was simply an informal study report in the class of a White Paper. Ordinarily it would have circulated, been read by my lab director and been the subject of occasional in-house discussions perhaps, but probably would not have raised any questions. Gerald Aardsma, then at ICR in San Diego, somehow got a copy of the report soon after it was available in printed form. He did not call me, his peer and colleague in science and in creation research, a fellow-Christian, to discuss his concerns about this work. He did not call my lab director, Dr. Robert Leonard, nor the Engineering VP, Dr. David A. Johnson over him, both of whom knew me well and were aware of the many areas of interest I had as expressed in other white papers and reports. Dr. Aardsma elected to call the President of SRI! In an angry tone (I am told) he accused the Institute of conducting unscientific studies. He demanded that this one report be withdrawn. Aardsma then phoned my immediate colleague, Dr. Roger Vickers, who described Aardsma as angry and on the warpath. Vickers suggested that Aardsma should have phoned me first of all.
Of course the President of SRI asked to see the report, and checked down the chain of command so he could report back to Aardsma. There was no paper trail on the report and my immediate lab director had not actually read it, though he had a copy. Since the report had no official project number it could not be entered into the Library system. Finally the President of SRI was told by someone on the staff that ICR was a right-wing fundamentalist anti-evolution religious group in San Diego and should not be taken seriously on anything! ICR's good reputation suffered a good deal that day as well as my own. On top of this, major management and personnel changes were underway at the time. An entire generation of us left the Institute a few months later because of shrinking business opportunities. Our lab director, Dr. Leonard, and I left at the same time and the new director, Dr. Murray Baron, decided that any further inquiries about this report should be referred directly to me. There was no one on the staff at the time, he said, who had a sufficient knowledge of physics to address questions and we had no paying project pending that would allow the lab to further pursue this work. So the report should not be entered into the Library accounting system. I next phoned Gerald Aardsma--as one Christian to another--and asked him about his concerns. I told him gently that he had done great harm to me personally in a largely secular Institution where I had worked hard for many years to build a credible Christian witness. He seemed surprised at my suggestion that out of common courtesy he should have discussed this report with me first of all. Aardsma told me that he could easily refute the claim that c was not a constant and was in fact about to publish his own paper on this. I subsequently asked my friend Brad Sparks, then at Hank Hanegraaff's organization in Irvine, to visit ICR and take a look at Aardsma's work.
Brad did so and shortly after reported to me not only that Aardsma's work was based on the misuse of statistical methods, but furthermore that Aardsma would not listen to any suggestions or criticisms of his paper. It was not long after, while speaking on creation subjects in Eastern Canada, that I became good friends with government statistician Alan M. Montgomery. Alan immediately saw the flaws in Aardsma's paper and began to answer a whole flurry of attacks on the CDK hypothesis which began to appear in the Creation Research Society Quarterly (CRSQ). Alan and I subsequently wrote a peer-reviewed paper which was published in Galilean Electrodynamics which pointed out that careful statistical analysis of the available data strongly suggested that c was not a constant, and neither were other constants containing the dimension of time. At that time Montgomery made every effort to answer creationist critics in CRSQ and in the Australian Creation Ex Nihilo Quarterly. All of these attacks on CDK were based on ludicrously false statistical methods by amateurs for the most part. The whole subject of CDK was eventually noticed by the secular community. Most inquirers took Aardsma's faulty ICR Impact article as gospel truth and went no further. To make sure all criticisms of statistical methods were answered, Montgomery wrote a second paper, presented at the Pittsburgh Creation Conference in 1994. Alan used weighted statistics and showed once again that the case for CDK was solid--confidence levels of the order of 95% were the rule. Both Alan and I have repeatedly looked at the data from the standpoint of the size of error bars, the epoch when the measurements were made, and the method of measurements. We have tried various sets and sorts of the data, deliberately being conservative in excluding outliers and looking for experimenter errors and/or bias. No matter how we cut the cards, our statistical analyses yield the same conclusion.
It is most unlikely that the velocity of light has been a constant over the history of the universe. In addition to inviting critiques of the statistics and the data, Alan and I have also asked for arguments from physics as to why c should not be a constant. And, if c were not a fixed constant, what are the implications for the rest of physics? We have as yet had no serious takers to either challenge. Just for the public record, I have placed our two reports on my web pages, along with relevant notes where it is all available for scrutiny by anyone. I have long since given up on getting much of a fair hearing from the creationist community. However I note from my web site access logs that most visitors to my web pages on this subject come from academic institutions so I have a hunch this work is quietly being considered in many physics labs.

Note added by Brad Sparks: I happened to be visiting ICR and Gerry Aardsma just before his first Acts & Facts article came out attacking Setterfield. I didn't know what he was going to write but I did notice a graph pinned on his wall. I immediately saw that the graph was heavily biased to hide above-c values because the scale of the graph made the points overlap and appear to be only a few points instead of dozens. I objected to this representation and Aardsma responded by saying it was too late to fix, it was already in press. It was never corrected in any other forum later on either, to my knowledge. What is reasonable evidence for a decrease in c that would be convincing to you? Do you require that every single data point would have to be above the current value of c? Or perhaps you require validation by mainstream science, rather than any particular type or quality of evidence. We have corresponded in the past on Hugh Ross and we seemed to be in agreement. Ross' position is essentially that there could not possibly ever be any linguistic evidence in the Bible to overturn his view that "yom" in the Creation Account meant long periods; his position is not falsifiable. This is the equivalent of saying that there is no Hebrew word that could have been used for 24-hour day in Genesis 1 ("yom" is the only Hebrew word for 24-hour day and Ross is saying it could not possibly mean that in Gen. 1). Likewise, you seem to be saying there is no conceivable evidence even possible hypothetically for a decrease in c, a position that is not falsifiable.

Response from Alan Montgomery: At the time I presented my Pittsburgh paper (1994) I looked at Humphreys' paper carefully as I knew that comments on the previous analyses were going to be made mandatory. It became very apparent to me that Humphreys was relying heavily on Aardsma's flawed regression line. Furthermore, Aardsma had not done any analysis to prove that his weighted regression line made sense and was not some vagary of the data. Humphreys' paper was long on opinion and physics but he did nothing I would call an analysis. I saw absolutely nothing in the way of statistics which required response. In fact, the lack of any substantial statistical backup was an obvious flaw in the paper. To back up what he says requires defining the areas where the decrease in c is observed and where it is constant. If Humphreys is right the c decreasing area should be early and the c constant area should be late. There may be ambiguous areas in between. This he did not do. I repeat that Humphreys expressed an opinion but did not demonstrate statistically that it was true. Secondly, Humphreys' argument that the apparent decrease in c can be explained by gradually decreasing errors was explicitly tested for in my paper. The data was sorted by error bar size and regression lines were put through the data. By the mid-twentieth century the regression lines appeared to go insignificant. But then in the post-1945 data, the decrease became significant again in complete contradiction to his hypothesis. I would ask you who has in the last 5 years explained that? Name one person! Third, the aberration values not only decrease to the accepted value but decrease even further. If Humphreys' explanation is true why would those values continue to decrease?
Why would those values continue to decrease in a quadratic just as the non-aberration values and why would the coefficients of the quadratic functions of a weighted regression line be almost identical and this despite the fact that the aberration and non-aberration data have highly disparate weights, centres of range and domain and weighted range and domain? Fourth, if what Humphreys claims is true why are there many exceptions to his rule? For example, the Kerr Cell data is significantly below the accepted value. Therefore according to Humphreys there should be a slow increasing trend back to the accepted value. This simply is not true. The next values take a remarkable jump and then decrease. Humphreys made not even an attempt to explain this phenomenon. Why? Humphreys' paper is not at all acceptable as a statistical analysis. My statement merely reflected the truth about what he had not done. His explanation is a post hoc rationalization.

An additional response from a witness to the arguments regarding the data and Setterfield's responses (or accused lack of them): I have recently talked to Mr. Setterfield and he has referenced CRSQ vol 25, March 1989, as his response to the argument brought up regarding the aberration measurements. The following two paragraphs are a direct quote from this part of the Setterfield response to the articles published in previous editions by Aardsma, Humphreys, and Holt critiquing the Norman-Setterfield 1987 paper. This part of the response is from page 194. After having read this, I am at a loss to understand why anyone would say Setterfield has not responded regarding this issue.

References for the above:

Simon Newcomb, "The Elements of the Four Inner Planets and the Fundamental Constants of Astronomy," in the Supplement to the American Ephemeris and Nautical Almanac for 1897, p. 138 (Washington).

E.T. Whittaker, "A History of the Theories of Aether and Electricity," vol. 1, pp 23, 95 (1910, Dublin).

K.A. Kulikov, "Fundamental Constants of Astronomy," pp 81-96 and 191-195, translated from Russian and published for NASA by the Israel Program for Scientific Translations, Jerusalem. Original dated Moscow 1955.

(Nov. 13, 1999)

Response from Alan Montgomery: This is all drivel. For example, 299792.458 ± 27.5 is drivel. 299792.458 km/sec is the speed of light; 27.5 km/sec/year is a linear change to the speed of light. The two values are apples and oranges and any plus or minus stuck between them is meaningless. Or is .000092 = .0092% change per year. This looks very small. But it is like a salesman who sells you a stove for a few dollars per day for 3 years at 18% interest to the finance company. The real question is “Is the change statistically significant?” and according to the model I produced in my ICC paper, c(t) = c(0) + 0.03*t^2 or c(t) = (1 + 10^(-7)*t^2)*c(0), which is smaller than .000092 and still statistically significant. (3/17/03)

Additional from Alan Montgomery in reply to an additional email he received: In 1994 I presented a paper on the secular decrease in the speed of light. I used a weighted regression technique and found a quadratic which produced a regression model with significant quadratic coefficients. This has been reviewed by statisticians with Ph.D.s. Some have been convinced and others not. Also a systematic search was made for obvious sources of the statistical decrease to find a correlation with some technique or era that might bias the statistics. The result was a systematic low value for the aberration methodology. When these values were segregated from the non-aberration values significant trends were found in both. Thus even the systematic errors that were found only reinforced the case. These figures having stood for over 10 years, I wonder why so many people still doubt the result when none have produced a better analysis or better data. Alan Montgomery
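The arithmetic behind the figures quoted above can be checked with a short Python sketch. It assumes that c(0) equals today's defined value of 299,792.458 km/s and that t is measured in years from the model's reference epoch; neither is stated in the reply, so both are assumptions of this example, as are the variable and function names.

```python
C0 = 299_792.458   # km/s; assumed stand-in for c(0) in the quoted model

# Linear rate quoted by the correspondent, as a fraction of c per year.
linear_rate = 27.5                      # km/s per year
fractional_linear = linear_rate / C0    # compare with the quoted .000092

# Quadratic coefficient from the quoted model, as a fraction of c.
quad_coeff = 0.03                       # km/s per year^2
fractional_quad = quad_coeff / C0       # compare with the quoted 10^(-7)

def c_additive(t):
    """c(t) = c(0) + 0.03*t^2, with t in years (assumed unit)."""
    return C0 + quad_coeff * t ** 2

def c_multiplicative(t):
    """c(t) = (1 + 10^(-7)*t^2)*c(0), the second quoted form."""
    return (1 + 1e-7 * t ** 2) * C0

print(fractional_linear, fractional_quad)
print(c_additive(100), c_multiplicative(100))
```

Under these assumptions the two written forms of the model are the same curve to within rounding of the coefficient, since 0.03 divided by c(0) is very close to 10^(-7).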

December, 2009, question and comment on the statistical use of the data

Setterfield: In the 1987 Report, I only used r, not R². N is the number of observations involved, right? Figure 1 is the Pulkovo results, as listed for two hundred years from 1740-1940, with the majority of the observations being in the second hundred years. The lines you see are the error bars (for those unacquainted with a graph like this) and show the measurements have a trend beyond the potential error in any of the given observations. This is representative of what was done and the number of total observations may well go past the tens of thousands and into the hundreds of thousands.

From Alan Montgomery: The statistical significance required is subjective. It depends on what you are using it for and how sure you have to be to take a significant action. It would be a different significance if you are selling light bulbs vs atomic reactors. Several of the tests I did in my statistical model went beyond p < .001.

November, 2014 -- Time and Length Standards and the Speed of Light

Setterfield: First, there is the matter of timing and clocks. In 1820 a committee of French scientists recommended that day lengths throughout the year be averaged, to what is called the Mean Solar Day. The second was then defined as 1/86,400 of this mean solar day, supplying science with an internationally accepted standard of time. This definition was used right up to 1956. In 1956 it was decided that the definition of the second be changed to become 1/31,556,925.9747 of the earth's orbital period that began at noon on 1st January 1900. Note that this definition of the second ensured that the second remained the same length of time as it had always been right from its earlier definition in 1820. This new 1956 definition continued until 1967 when atomic time became standard. The point to note is that in 1967 one second on the atomic clock was DEFINED as being equal to the length of the dynamical second, even though the atomic clock is based on electron transitions. This meant that the length of the second was essentially unchanged from 1820 to 1967. Interestingly, the vast majority of c measurements were made in that same period, during which the actual length of the second had not changed. During that period there was a measured change in the value of c from at least 299,990 km/s down to about 299,792 km/s, a drop of the order of 200 km/s in 136 years. Therefore, the decline in c during that period cannot be attributed to changes in the definition of a second. As a side note it is interesting that in 1883 clocks in each town and city were set to their own local mean solar noon, so every individual city had its own local time based on the Mean Solar Day. It was the vast American railroad system that caused a change in that. On 11th October 1883, a General Time Convention of the railways divided the United States into four time zones, each of which would observe uniform time, with a difference of precisely one hour from one zone to another.
Later, in 1884, an international conference in Washington extended this system to cover the whole earth. In discussing the unit of length, the meter was originally introduced into France on the 22nd of June, 1799, and enforced by law on the 22nd of December 1799. This "Meter of the Archives" was the distance between the end faces of a platinum bar. From September 1889 until 1960 the meter was defined as the distance between two engraved lines on a platinum-iridium bar held at the International Bureau of Weights and Measures in Sèvres, France. This more recent platinum-iridium standard of 1889 is specifically stated to have reproduced the old meter within the accuracy then possible, namely about one part in a million. Then in 1960, the meter was re-defined in terms of the wavelength of a krypton 86 transition. The accuracy of lasers had rendered a new definition necessary in 1983. It can therefore be stated that from about 1800 up to at least 1960 there was no essential change in the length of the meter. It was during that time that c was measured as varying. As a consequence, the observed variation in c occurred while the definition of the meter was unchanged. Therefore, the measured change in c can have nothing to do with variations in the definitions of the standard meter or the standard second. These conclusions are sustained by an additional line of evidence. The aberration measurements of the speed of light are entirely independent of the standard definitions of time and length. If the decline in the value of light-speed was indeed due to different definitions, then the aberration measurements would have shown no variation in c at all as the aberration angle would be constant. This would have occurred because what is being measured is an angle, not a speed or a time. The aberration angle of light from a star is equivalent to the angle by which vertically falling rain appears to slope towards a person in motion through the rain.
It is called the aberration angle. That angle depends on two things: the speed of movement through the rain (as in a car) and the speed at which the rain is falling. Experiments reveal that, for a constant speed of the car, the slower the speed at which the rain is falling, the greater will be the aberration angle. In the case of the aberration of light measurements, the vertically falling rain is replaced by light coming in vertically from a star, while the car’s motion through the rain has its equivalent in the speed of the earth moving in its orbit. Since the earth’s motion in its orbit is constant, the only cause for variation in the angle is a change in the speed of light. Thus, as the measurements of the aberration angle of light from selected stars reveal from 100 years of observations at Pulkovo Observatory, the aberration angle increased systematically. If it were merely a change in definition of time or length standards, there would have been no change in the measured angle – that would be independent of those definitions. So the fact that the aberration measurements also show a decline in the speed of light is confirmation that the effect is absolutely genuine.
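The rain analogy corresponds to the classical relation tan(angle) = v/c, where v is the observer's speed and c the speed of the incoming light. The Python sketch below is only an illustration of that relation: the mean orbital speed of 29.78 km/s, the function name, and the neglect of relativistic corrections are assumptions of the example, and it does not reproduce any of the Pulkovo reductions.

```python
import math

ARCSEC_PER_RADIAN = 180 * 3600 / math.pi

def aberration_angle_arcsec(c_km_s, v_km_s=29.78):
    """Classical aberration angle for light arriving perpendicular to the
    observer's motion: tan(angle) = v / c. The default v is an assumed
    mean orbital speed of the earth in km/s."""
    return math.atan(v_km_s / c_km_s) * ARCSEC_PER_RADIAN

# Two c values mentioned in the text, in km/s.
angle_now = aberration_angle_arcsec(299_792.458)
angle_higher_c = aberration_angle_arcsec(299_990.0)

print("angle at 299,792.458 km/s:", angle_now, "arcsec")
print("angle at 299,990 km/s:    ", angle_higher_c, "arcsec")
print("difference:", angle_now - angle_higher_c, "arcsec")
```

For a fixed orbital speed, a lower c gives a larger angle, which is the direction of change described above. The difference between the two cases is on the order of hundredths of an arcsecond, which gives a sense of the measurement precision the argument depends on.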

Setterfield: As far as the speed of light itself is concerned, it was declared an absolute universal constant in October of 1983. No measurements have been made of that quantity since then. As a result, we have no direct record of its subsequent behavior. However, the work that I have done has shown that there are a number of other associated constants which are changing synchronously with the speed of light, and measurements of these quantities have continued. The last official determinations of these associated constants were done in 2010 and showed that variation was continuing. I have published all these results in a peer-reviewed Monograph entitled "Cosmology and the Zero Point Energy". It was published in July of 2013 by the Natural Philosophy Alliance (NPA) as part of their Monograph Series and was presented at their Conference that year. Other recent articles on this and related matters can be found on our website under research papers. Therefore, far from being disproven, the data have been set into a wider context which shows the reason for the variation in the speed of light and all other associated constants. As such, the whole proposition is far more firmly based than ever before. I hope that answers your questions. If you have any further queries, please get back to me.