Astronomical Discussion

In 1998, Barry Setterfield wrote the following: Notes on a Static Universe: Incredibly, an expanding universe does imply an expanding earth on most cosmological models that follow Einstein and/or Friedmann. As space expands, so does everything in it. This is why, if the redshift signified cosmological expansion, even the very atoms making up the matter in the universe would also have to expand. There would be no sign of this in rock crystal lattices etc., since everything was expanding uniformly, as was the space between them. This expansion occurred at the Creation of the Cosmos as the verses you listed have shown. It is commonly thought that the progressive redshift of light from distant galaxies is evidence that this universal expansion is still continuing. However, W. Q. Sumner in Astrophysical Journal 429:491-498, 10 July 1994, pointed out a problem. The maths indeed show that atoms partake in such expansion, and so does the wavelength of light in transit through space. This "stretching" of the wavelengths of light in transit will cause it to become redder. It is commonly assumed that this is the origin of the redshift of light from distant galaxies. But the effect on the atoms changes the wavelength of emitted light in the opposite direction. The overall result of the two effects is that an expanding cosmos will have light that is blue-shifted, not red-shifted as we see at present. The interim conclusion is that the cosmos cannot be expanding at the moment (it may be contracting). Furthermore, as Arizona astronomer William Tifft and others have shown, the redshift of light from distant galaxies is quantised, or goes in "jumps". Now it is uniformly agreed that any universal expansion or contraction does not go in "jumps" but is smooth. Therefore expansion or contraction of the cosmos is not responsible for the quantisation effect: it may come from light-emitting atoms.
If this is so, cosmological expansion or contraction will smear out any redshift quantisation effects, as the emitted wavelengths get progressively "stretched" or "shrunk" in transit. The final conclusion is that the quantised redshift implies that the present cosmos must be static after initial expansion. [Narlikar and Arp proved that a static matter-filled cosmos is stable against collapse in Astrophysical Journal 405:51-56 (1993)]. Therefore, as the heavens were expanded out to their maximum size, so were the earth, and the planets, and the stars. I assume that this happened before the close of Day 4, but I am guessing here. Following this expansion event, the cosmos remained static. (Barry Setterfield, September 25, 1998) In 2002, he authored the article "Is the Universe Static or Expanding", in which he presents further research on this subject.

Setterfield: As far as the Hubble Law is concerned, all Hubble did was to note that there was a correspondence between the distance of a galaxy and its redshift. His Law did not establish universal expansion. That interpretation came shortly after as a result of Einstein's excursions with relativity and the various possible cosmologies it opened up. His work suggested a Doppler shift which required the redshift, z, to be multiplied by the speed of light, c. This then gave rise to the "recession velocity" of the galaxies through static space. Hubble was always cautious about using this device and expressed his misgivings even as late as the 1930s. However, Friedmann and Lemaitre came along with a different approach using a refinement of Einstein's equations in which the galaxies were static and the space between them was expanding, and stretching light waves in transit to give a redshift. There are arguments which show that both these interpretations have their shortcomings, so for the serious student, the redshift cannot be used unequivocally to point to universal expansion. Into this scenario, the work of William Tifft and others starting in 1976 threw an additional factor into the mix. This was the evidence that the redshift is quantized or goes in jumps. If the galaxies are racing away from each other, that cannot happen with the velocity going in jumps. It's like a car accelerating down the highway, but only increasing its speed and traveling in multiples of 5 miles per hour. It just cannot happen. If the fabric of space itself is the cause of the redshift, then it is equally impossible for space to expand in jumps. It is for this reason that mainstream astronomers try to discredit or reject this redshift quantization. My article, Zero Point Energy and the Red Shift, is another approach which places the cause of the redshift with every atomic emitter whose energy is lower when the ZPE strength was lower.
The evidence suggests that the ZPE strength has increased with time. For these reasons, it is legitimate to associate the redshift with distance but not with universal expansion. The work of Halton Arp suggests that there may be exceptions to that, but there is additional evidence that his approach may have deficiencies. It is this evidence that I would like to throw into the mix as well. I do this, not to discredit Arp but to show that there is an entirely different way of looking at this problem. It has been presented on a number of occasions, including in the NPA Conference Proceedings. The articles there were authored by Lyndon Ashmore. The first was in the Proceedings for 2010, pages 17-22, and entitled "An explanation of a redshift in a static universe." The basic proposition goes like this: There are hydrogen clouds more or less uniformly distributed throughout the cosmos. If the cosmos is expanding, these clouds should be getting further and further apart. Therefore, when we look back at the early universe, that is, looking at very distant objects, we should see the hydrogen clouds much closer together than in our own region of space. If the universe is static, the average distance between clouds should be approximately constant. We can tell when light goes through a hydrogen cloud by the Lyman Alpha absorption lines in the spectrum. This leaves its signature in the spectrum of any given galaxy. The further away the galaxy, the more Lyman Alpha lines there are, as the light has gone through more hydrogen clouds. These lines are at a position in the spectrum that corresponds to the cloud's redshift. Thus, for very distant objects, there is a whole suite of Lyman Alpha lines starting at a redshift corresponding to the object's distance and then at reducing redshifts until we come to our own galactic neighborhood. This suite of lines is called the Lyman Alpha forest. The testimony of these lines is interesting.
From a redshift, z = 6 down to a redshift of about z = 1.6 the lines get progressively further apart, indicating expansion. From about z = 1.6 down to z = 0 the lines are essentially at a constant distance apart. This indicates that the cosmos is now static after an initial expansion, which ceased at a time corresponding to a redshift of z = 1.6. These data are a problem for Arp because, if the quasars are relatively nearby objects, there are nowhere near enough hydrogen clouds between us and the quasar to give the forest of lines that we see. This tends to suggest that the quasars really are at great distances. Again, the original article regarding this is still appropriate.
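As a rough illustration of how each cloud's redshift fixes the position of its Lyman Alpha line, the sketch below converts cloud redshifts into observed wavelengths using the rest wavelength of about 1215.67 angstroms. The function names and the sample redshifts are hypothetical and are not taken from Ashmore's papers; the sketch only shows the wavelength bookkeeping, not a real line-density analysis.

```python
# Rest wavelength of the hydrogen Lyman Alpha line, in angstroms.
LYMAN_ALPHA_REST = 1215.67


def observed_wavelength(z, rest=LYMAN_ALPHA_REST):
    """Wavelength at which a cloud at redshift z leaves its absorption line."""
    if z < 0:
        raise ValueError("redshift must be non-negative in this sketch")
    return rest * (1.0 + z)


def forest_lines(cloud_redshifts):
    """Observed line positions, sorted from the nearest cloud outward."""
    return sorted(observed_wavelength(z) for z in cloud_redshifts)


def line_spacings(cloud_redshifts):
    """Gaps between neighbouring forest lines, in angstroms."""
    lines = forest_lines(cloud_redshifts)
    return [b - a for a, b in zip(lines, lines[1:])]


if __name__ == "__main__":
    # Hypothetical cloud redshifts along one line of sight (not real data).
    sample = [0.2, 0.4, 0.6, 0.8, 1.0]
    print(forest_lines(sample))
    print(line_spacings(sample))
```

Clouds evenly spaced in redshift give evenly spaced lines, so a change in line spacing with redshift is what the argument above reads as a change in cloud separation.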

Setterfield: The evidence that an accelerating expansion is occurring comes because distant objects are in fact further away than anticipated given a non-linear and steeply climbing red-shift/distance curve. Originally, the redshift/distance relation was accepted as linear until objects were discovered with a redshift greater than 1. On the linear relation, this meant that the objects were receding with speeds greater than light, a no-no in relativity. So a relativistic correction was applied that makes the relationship start curving up steeply at great distances. This has the effect of making large redshift changes over short distances. Now it is found that these objects are indeed farther away than this curve predicts, so they have to drag in accelerating expansion to overcome the hassle. The basic error is to accept the redshift as due to an expansion velocity. If the redshift is NOT a velocity of expansion, then these very distant objects are NOT travelling faster than light, so the relativistic correction is not needed. Given that point, it becomes apparent that if a linear redshift relation is maintained throughout the cosmos, then we get distances for these objects that do not need to be corrected. That is just what my current redshift paper does. (January 12, 1999)
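The two velocity readings contrasted above can be put side by side in a minimal sketch. It assumes that the "relativistic correction" is the textbook special-relativistic Doppler formula, 1 + z = sqrt((1 + v/c) / (1 - v/c)); the source does not give the formula, and the function names are hypothetical.

```python
C_KM_S = 299_792.458  # speed of light in km/s


def linear_velocity(z):
    """Velocity from the simple linear reading, v = c * z."""
    return C_KM_S * z


def relativistic_velocity(z):
    """Velocity from the special-relativistic Doppler formula.

    Solves 1 + z = sqrt((1 + b) / (1 - b)) for b = v / c.
    """
    if z <= -1.0:
        raise ValueError("redshift must be greater than -1")
    s = (1.0 + z) ** 2
    return C_KM_S * (s - 1.0) / (s + 1.0)


if __name__ == "__main__":
    # Print v/c under both readings for a few sample redshifts.
    for z in (0.1, 0.5, 1.0, 2.0, 6.0):
        print(z, linear_velocity(z) / C_KM_S, relativistic_velocity(z) / C_KM_S)
```

On the linear reading the velocity passes c at z = 1, while the relativistic form stays below c at every redshift, which is the difference the paragraph above turns on.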

Setterfield: Recent aberration experiments have shown that distant starlight from remote galaxies arrives at earth with the same velocity as light does from local sources. This occurs because the speed of light depends on the properties of the vacuum. If we assume that the vacuum is homogeneous and isotropic (that it has the same properties uniformly everywhere at any given instant), then light-speed will have the same value right throughout the vacuum at any given instant. The following proposition will also hold. If the properties of the vacuum are smoothly changing with time, then light speed will also smoothly change with time right throughout the cosmos. On the basis of experimental evidence from the 1920's when light speed was measured as varying, this proposition maintains that the wavelengths of emitted light do not change in transit when light-speed varies, but the frequency (the number of wave-crests passing per second) will. The frequency of light in a changing c scenario is proportional to c itself. Imagine light of a given earth laboratory wavelength emitted from a distant galaxy where c was 10 times the value it has now. The wavelength would be unchanged, but the emitted frequency would be 10 times greater as the wave-crests are passing 10 times faster. As light slowed in transit, the frequency also slowed, until when it reaches earth at c now, the frequency would be the same as our laboratory standard as well as the wavelength. Trust that this reply answers your question. (June 15, 1999)
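The proposition above amounts to holding the wavelength fixed in the relation frequency = c / wavelength while c changes. A minimal sketch of that arithmetic follows; the 500 nm wavelength and the intermediate c factors are hypothetical values chosen only for illustration.

```python
C_NOW = 299_792_458.0  # present speed of light, m/s


def frequency(wavelength_m, c=C_NOW):
    """Frequency of light of a given wavelength when the light speed is c."""
    return c / wavelength_m


def frequency_in_transit(wavelength_m, c_factors):
    """Frequency at each stage as c falls, with the wavelength held fixed.

    c_factors are multiples of the present light speed, e.g. [10, 5, 1].
    """
    return [frequency(wavelength_m, C_NOW * k) for k in c_factors]


if __name__ == "__main__":
    wavelength = 500e-9  # hypothetical laboratory wavelength, 500 nm
    stages = frequency_in_transit(wavelength, [10, 5, 2, 1])
    print([f / frequency(wavelength) for f in stages])
```

With c at 10 times its present value the emitted frequency is 10 times the laboratory value, and it falls back to the laboratory value once c reaches its present value.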

Missing Mass and Dark Matter?

Setterfield: Thank you for your questions; let me see what I can do to answer them. Firstly about missing mass. There are two reasons why astronomers have felt that mass is "missing". The first is due to the apparent motion of galaxies in clusters. That motion in the outer reaches of the clusters appears to be far too high to allow the clusters to hold together gravitationally unless there is extra mass somewhere. However, that apparent motion is all measured by the redshift of light from those galaxies. Tifft's work with the quantised redshift indicates that redshift is NOT a measure of galaxy motion at all. In fact, motion washes out the quantisation. On that basis, the whole foundation on which the missing mass in galaxy clusters is built is faulty, as there is very little motion of galaxies in clusters at all. The second area where astronomers have felt that mass is "missing" is due to the behaviour of rotation rates of galaxies as you go out from their centres. The behaviour of the outer regions of galaxies is such that there must be more mass somewhere there to keep the galaxies from flying apart, as their rotation rate is so high. It seems that there might indeed be a large envelope of matter in transparent gaseous form around galaxies that could account for this discrepancy. Alternatively, some astronomers are checking for Jupiter-sized solid objects in the halo of our galaxy that could also overcome the problem. A third possibility is that the Doppler equations on which everything is based may be faulty at large distances due to changes in light speed. I am looking into this.

Setterfield: The entire discussion about dark energy and dark matter is based, at least partly, on a mis-interpretation of the redshift of light from distant galaxies. But before I elaborate on that, let me first specifically address a recent article in Science (“Breakthrough of the Year: Illuminating the Dark Universe” Charles Seife, Science 302, 2038-2039, 2003) espousing the proof for dark energy. I find this amazing in view of the recent data that has come in from the European Space Agency's (ESA) X-ray satellite, the XMM-Newton. According to the ESA News Release for December 12, 2003 the data reveal "puzzling differences between today's clusters of galaxies and those present in the Universe around seven thousand million years ago." The news release says that these differences "can be interpreted to mean that 'dark energy' which most astronomers now believe dominates the universe simply does not exist." In fact, Alain Blanchard of the Astrophysical Observatory in the Pyrenees says the data show "There were fewer galaxy clusters in the past". He goes on to say that "To account for these results you have to have a lot of matter in the Universe and that leaves little room for dark energy." In other words, we have one set of data which can be interpreted to mean that dark energy exists, while another set of data suggests that it does not exist. In the face of this anomaly, it may have been wiser for Science to have remained more circumspect about the matter. Unfortunately, the scientific majority choose to run with an interpretation they find satisfying, and tend to marginalize all contrary data. I wonder if the European data may not have been published by the time that Science went to print on the issue. Thus there may be some embarrassment by these later results, and they may be marginalized as a consequence. 
The interpretation being placed on the WMAP observations of the microwave background is that it is the "echo" of the Big Bang event, and all other data is interpreted on this basis. But Takaaki Musha from Japan pointed out in an article in Journal of Theoretics (3:3, June/July 2001) that the microwave background may well be the result of the Zero Point Energy allowing the formation of virtual tachyons in the same way that it allows the formation of all other kinds of virtual particles. Musha demonstrated that all the characteristics of the microwave background can be reproduced by this approach. In that case, the usual interpretation of the WMAP data is in error and the conclusions drawn from it should be discarded and a different set of conclusions deduced. However, the whole dark matter/dark energy discussion points to the fact that anomalies exist which current theory did not anticipate. Let me put both of these problems in context. Dark matter became a necessity because groups of galaxies seemed to have individuals within the group which appeared to be moving so fast that they should have escaped long ago if the cosmos was 14 billion years old. If the cosmos was NOT 14 billion years old but, say, only 1 million or 10,000 years old, the problem disappears. However, another pertinent answer also has been elaborated by Tifft and Arp and some other astronomers, but the establishment does not like the consequences and tends to ignore them as a result. The answer readily emerges when it is realized that the rate of movement of the galaxies within a cluster is measured by their redshift. The implicit assumption is that the redshift is a measure of galaxy motion. Tifft and Arp pointed out that the quantized redshift meant that the redshift was not intrinsically a measure of motion at all but had another origin. They pointed out that, in the centre of the Virgo cluster of galaxies, where motion would be expected to be greatest under gravity, the ACTUAL motion of the galaxies smeared out the quantization. If actual motion does this, then the quantized redshift exhibited by all the other galaxies further out means that there is very little actual motion of those galaxies at all. This lack of motion destroys the whole basis of the missing matter argument and the necessity for dark matter then disappears. The whole missing matter or dark matter problem only arises because it is in essence a mis-interpretation of what the redshift is all about.

In a similar fashion, the whole dark energy issue (or the necessity for the cosmological constant) is also a redshift problem. It arises because there is a breakdown in the redshift/distance relationship at high redshifts. That formula is based on the redshift being due to the galaxies racing away from us with high velocities. Distant supernovae found up to 1999 proved to be even more distant than the redshift/distance formula indicated. This could only be accounted for on the prevailing paradigm if the controversial cosmological constant (dark energy) were included in the equations to speed up the expansion of the universe with time. The fact that these galaxies were further away than expected was taken as proof that the cosmological constant (dark energy) was acting. Then in October this year, Adam Riess announced that there were 10 even more distant supernovae whose distance was CLOSER than the redshift relation predicted. With a deft twist, this was taken as further proof for the existence of dark energy. The reasoning went that up to a redshift of about 1.5 the universal expansion was slowing under gravity. Then at that point, the dark energy repulsion became greater than the force of gravity, and the expansion rate progressively speeded up.

What in fact we are looking at is again the mis-interpretation of the redshift. Both dark matter and dark energy hinge on the redshift being due to cosmological expansion. If it has another interpretation, and my latest paper being submitted today [late December, 2003] shows it does, then the deviation of distant objects from the standard redshift/distance formula is explicable, and the necessity for dark matter also disappears. Furthermore, the form of the actual equation rests entirely on the origin of the Zero Point Energy. The currently accepted formula can be reproduced exactly as one possibility of several. But the deviation from that formula shown by the observational evidence is entirely explicable without the need for dark energy or dark matter or any other exotic mechanism. I trust that this gives you a feel for the situation.

More on Dark Matter and Dark Energy

Question: I have just recently come across your work and feel like I have come home! For years I have squared the accounts of the Bible and science (being from a science background myself) by passing Genesis off (in particular) as 'allegorical' and always telling Christian friends that we must not ignore the scientific evidence. I was uncomfortable with all the scientific explanations, though, and suspected things were not quite right. I was aware that data was data and that the scientific explanations throughout history had been modified (in some cases drastically) in the light of new evidence. Hence, the Newtonian view becoming the Einsteinian view. So I was left with: 'well, this is what I currently believe until such time as new evidence indicates otherwise'.

Setterfield: It is a delight that you have found us, and many thanks for the encouragement it gives to us as well! You have had quite a journey, but the Lord has brought you to some sort of haven of rest. We trust that you will be able to absorb what we have put on our website and share it with others as the Lord directs. As for your specific questions, I would like to deal with the missing mass/dark matter problem. This really is a deep problem for gravitational astronomers. Currently there is no assured answer from that direction except to modify the laws of gravity and so on - all very messy procedures. However, what must be realized is that there are two aspects to the problem. The first that was noted was the missing mass in galaxy clusters. What was happening was that the redshifts of light from these galaxy clusters were being measured and interpreted as a Doppler shift, like the drop in pitch of a police siren passing you. The redshift on this basis was taken to mean that galaxies are racing away from us.
When the average redshift of a cluster of galaxies was taken, and then the highest redshifts of galaxies in the cluster were compared with that, then, if the redshift was due to motion, these high redshift galaxies were moving so fast they would have escaped the cluster long ago and moved out on their own. As a result, since they are obviously still part of the cluster, there must be a lot of additional material in the cluster to give a gravitational field strong enough to hold the cluster together. The whole chain of reasoning depends on the interpretation of the redshift as galaxy motion. In contra-distinction to this interpretation of the redshift, the Zero Point Energy approach indicates that the redshift is simply the result of a lower ZPE strength back in the past which affects atomic orbits so they emit redder light (probably in quantum steps as indicated by Tifft and others). On that basis, the redshift has nothing to do with velocity, so the galaxy clusters have no mass "missing" and hence no "dark matter." We can see the actual motion of galaxies in the center of clusters where the motion actually washes out the quantization, so we are on the right track there. On this approach, the clusters of galaxies are "quiet" without high velocities that pull them apart. The second reason for the search for "missing mass/dark matter" is the actual rotation of individual galaxies themselves. As we look at our solar system, the further out the planet is from the sun, the slower it moves around the sun, because of the laws of gravity. Astronomers expected the same result when they looked at the velocities of stars in the disks of galaxies as they moved around the center of those galaxies. That is, they expected the stars in the outer part of the disk would move at a slower pace than stars nearer the center. They don't! The stars in the outer part of the disk orbit with about the same velocity as those closer in.
For this to happen on the gravitational model, there must be some massive amounts of material in and above/below the disk that we cannot see to keep the rotation rate constant. Since we have been unable to find it, we call it missing matter or dark matter. That is the conundrum. Another suggestion is that the laws of gravity need amending. However, if all galaxies were formed by a plasma process, as indicated by Anthony Peratt's simulations, then the problem is solved. In the formation of galaxies by plasma physics, Peratt has shown that the miniature galaxies produced in the laboratory spin in exactly the same way as galaxies do in outer space. No missing mass is needed; no modification to the laws of gravity. All that is needed is to apply the laws of plasma physics to the formation of galaxies, not the laws of gravitation. These laws involve electro-magnetic interaction, not gravitational interaction. The two are vastly different and the electro-magnetic interactions are up to 10^39 times more powerful than gravity! Things can form much more quickly by this mechanism as well, which has other implications in the origins debate. Well, that should give you some things to digest for the moment. You will have other questions. Get back to me when you want answers, and I will do my best.
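The expectation described above, that orbital speed falls off with distance when gravity from a central mass dominates, follows from the circular-orbit relation v = sqrt(GM / r). The sketch below applies it to the solar system as a minimal illustration; the function name is hypothetical, and the constants are standard values for the sun's gravitational parameter and the astronomical unit.

```python
import math

GM_SUN = 1.32712440018e20  # gravitational parameter of the sun, m^3/s^2
AU = 1.495978707e11        # astronomical unit, m


def keplerian_speed(r_au, gm=GM_SUN):
    """Circular orbital speed in km/s at r_au astronomical units from the mass."""
    if r_au <= 0:
        raise ValueError("orbital radius must be positive")
    return math.sqrt(gm / (r_au * AU)) / 1000.0


if __name__ == "__main__":
    # Approximate semi-major axes in AU.
    planets = {"Mercury": 0.387, "Earth": 1.0, "Jupiter": 5.203, "Neptune": 30.07}
    for name, r in planets.items():
        print(f"{name:8s} {r:6.3f} AU  {keplerian_speed(r):6.2f} km/s")
```

The speed drops as one over the square root of the distance, so a body four times further out moves at half the speed. A disk whose outer stars keep roughly the same speed as the inner ones does not follow this curve, which is the discrepancy at issue.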

Setterfield: Many thanks for keeping us informed on this; it is appreciated! The whole scenario with dark matter comes from the rotation rate of galaxy arms. If it is gravitational - the dark matter must exist. If galaxies rotate under the influence of plasma physics, nothing is missing - it is all behaving exactly as it should. Unfortunately gravitational astronomers have painted themselves into a corner with this one and cannot escape without losing a lot of credibility. The plasma physicists are having a good laugh.

Setterfield: Original answer -- You ask where the radiation etc. disappears to when it gets trapped inside a black hole. The quick answer is that it becomes absorbed into the fabric of space, as that fabric is made up of incredibly tiny Planck particle pairs that are effectively the same density as the black holes. The trapped radiation cannot travel across distances shorter than those between the Planck particles, and so gets absorbed into the vacuum. This brings us to your question of what a black hole really is. In essence, it is a region of space that has the same density as the Planck particle pairs that make up the fabric of space. It seems that these centres of galaxies may have formed as an agglomeration of Planck particle pairs at the inception of the universe and acted as the nucleus around which matter collected to form galaxies.

February, 2012 -- Since the above answer was written, there have been some developments in astronomy as a result of plasma physics and experiments conducted in plasma laboratories. In the laboratories, a spinning disk with polar jets can be readily produced as a result of electric currents and magnetic fields. The principle on which they operate is basically the same as the spinning disk of your electricity meter. What we see with "black holes" is the spinning disk and the polar jets. We measure the rate of spin of the disk and from that gravitational physics allows us to deduce that there is a tremendous mass in the center of the disk. This is where the idea of a black hole came from. However, with plasma physics, the rate of spin of the disk is entirely dependent upon the strength of the electric current involved. Plasma physics shows that there is a galactic circuit of current for every galaxy. As a consequence of this, and the experimental evidence we have, plasma physics does not have the necessity for a black hole to explain what we are seeing. It can all be explained by electric currents and magnetic fields. In reviewing this evidence, I came to the conclusion that plasma physics, applied to astronomy, is the better way to go, and that gravitational astronomy has caused itself some considerable problems in this and other areas. For example, in the case of our own galaxy, and the suspected black hole at its center, it has been deduced from the motion of objects in the vicinity that the mass of the black hole is such that it should cause gravitational lensing of objects in the area. We have searched for gravitational lensing for over two decades and none has been found -- despite extensive searches. This indicates that it is not a black hole we have in the center of our galaxy. Keep in mind no one has ever seen a black hole. It is simply a necessary construct if gravity is to be considered the main force forming and maintaining objects in the universe. 
However, gravity is a very weak force, especially when compared to electromagnetism, which is what we have seen out there in the form of vast plasma filaments.
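To give a sense of scale for the comparison between electromagnetism and gravity, the sketch below computes the ratio of the electrostatic force to the gravitational force between two charged particles. Both forces fall off as the inverse square of the distance, so the separation cancels. The function name is hypothetical, the constants are standard CODATA values, and the result depends on which pair of particles is chosen.

```python
COULOMB_K = 8.9875517923e9       # Coulomb constant, N m^2 / C^2
GRAV_G = 6.67430e-11             # gravitational constant, N m^2 / kg^2
E_CHARGE = 1.602176634e-19       # elementary charge, C
M_ELECTRON = 9.1093837015e-31    # electron mass, kg
M_PROTON = 1.67262192369e-27     # proton mass, kg


def force_ratio(m1, m2, q1=E_CHARGE, q2=E_CHARGE):
    """Electrostatic force divided by gravitational force for two particles.

    The separation drops out because both forces scale as 1 / r^2.
    """
    return (COULOMB_K * abs(q1 * q2)) / (GRAV_G * m1 * m2)


if __name__ == "__main__":
    print(f"electron-proton: {force_ratio(M_ELECTRON, M_PROTON):.3e}")
    print(f"proton-proton:   {force_ratio(M_PROTON, M_PROTON):.3e}")
```

For an electron and a proton the ratio comes out on the order of 10^39; for two protons it is smaller, on the order of 10^36, because the masses are larger.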

Setterfield: Astronomically, many of the important distant quasars turn out to be the superluminous, hyper-active centres of galaxies associated with a supermassive black hole. There is a mathematical relationship between the size of the black hole powering the quasar and the size of the nucleus of any given galaxy. As a result of this relationship, there is a debate going on as to whether the quasar/black hole came first and the galaxy formed around it, or vice versa. Currently, I tend to favour the first option. Nevertheless, whichever option is adopted, the main effect of dropping values of c on quasars is that as c decays, the diameter of the black hole powering the quasar will progressively increase. This will allow progressive engulfment of material from the region surrounding the black hole and so should feed their axial jets of ejected matter. This is the key prediction from the cDK model on that matter.

As far as pulsars are concerned, some doubt has recently been cast on the accepted model for the cause of the phenomenon that we are observing. Until a short time ago, it was thought that the age of a pulsar could be established from the rotation period of the object that was thought to give rise to the precisely timed signal. However, recent work on two fronts has thrown into confusion both the model for the age of the pulsar based on its rotation period, and also the actual cause of the signal, and hence what it is that is rotating. Until these issues can be settled, it is difficult to make accurate predictions from the cDK model.

Question: I've been asked why distant pulsars don't show a change in their rotation rate if the speed of light is slowing. Do you have an explanation? If you have any information on whether their observed rate of change should change with a slowing of c, I would appreciate it.

Setterfield: Thanks for your question about pulsars. There are several aspects to this. First of all, pulsars are not all that distant; the furthest that we can detect are in small satellite galaxies of our own Milky Way system. Second, because the curve of lightspeed is very flat at those distances compared with the very steep climb closer to the origin, the change in lightspeed is small. This means that any pulsar slowdown rate originating with the changing speed of light is also small. The third point is that the mechanism that produces the pulses is in dispute, as some theories link the pulses with magnetic effects separate from the star itself, so that the spin rate of the host star may not be involved. Until this mechanism is finally determined, the final word about the pulses and the effects of lightspeed cannot be given. If you have Cepheid variables in mind, a different situation exists. The pulsation that gives the characteristic curve of light intensity variation is produced by the behaviour of a thin segment near the star's surface layer. The behaviour of this segment of the star's outer layers is directly linked with the speed of light. This means that any slow-down effect of light in transit will already have been counteracted by a change in the pulsation rate of this layer at the time of emission. The final result will be that any given Cepheid variable will appear to have a constant period for the light intensity curve, no matter where it is in space and no matter how long we observe it.

Comment: Dr. Tom Bridgman's website shows in calculus the metric for canceling out the red-shift.
Even more important is the metric he shows for a kinematic argument using pulsar periods out to a distance of 1,000 parsecs from earth. According to Tom, you have very observable effects in pulsar periods using c-decay that is way off, so c-decay is not taking place within 1,000 parsecs of the earth.

Setterfield: Yes. As far as pulsars are concerned within 1000 parsecs of the earth, there should only be very, very minimal effects due to a changing c. In fact, at that distance, the change in c would be so small as to not even give rise to any redshift effects at all. The first redshift effect comes at the first quantum jump which occurs beyond the Magellanic Clouds. This shows that the rate at which c is climbing across our galaxy is very, very small. Consequently, any effect with pulsars within our galaxy is going to be negligible. Recently the reason for the spindown rate in pulsars has been seriously questioned, and new models for pulsar behaviour will have to be examined. See, for example, New Scientist 28 April, 2001, page 28. There are other references which I do not have on hand at the moment, but which document other problems as well. Until a viable model of pulsar behaviour and the cause of the pulsars themselves has been finalised, it is difficult to make any predictions about how the speed of light is going to affect them.

I am curious about the metric that Bridgman is using, because I show the change in wavelength over the wavelength (which is the definition of the redshift) is, in fact, occurring.

Response: Dr. William T. (Tom) Bridgman is using kinematics and a calculus formula to shoot down your c-decay metric. He even uses your predicted c-decay curve based upon the past 300 years or so of measurements for c and incorporates this into the pulsar changes that he claims should be observed. If correct, then c-decay did not take place within the past 300 years or even out to distances of 1 kiloparsec.

Setterfield: The curve that Tom is using for pulsar analysis is outdated and no longer applicable to the situation, as it dates from the 1987 paper. The recent work undergoing review indicates a very different curve which includes a slight oscillation. This oscillation means that there is very little variability in light speed out to the limits of our galaxy. Thus, even if the rest of his math were correct, and the behaviour of pulsars were known accurately, Tom's conclusions are not valid. I suggest that he read some of the more recent material before attempting fireworks.

Response: If c-decay has predictable and observable side effects like pulsar timing changes, changes in eclipses of Jupiter's and Saturn's moons, and also changes in stellar occultations in the ecliptic, these should be rigorously tested to see if they support or deny c-decay. At the moment Tom's metric denies c-decay as published based upon the 1987 c-decay curve.

Setterfield: I have examined pulsar timing changes in detail and responded some years ago to that so-called "problem". Any observed changes are well within the limits predicted by this variable light-speed (Vc) model just introduced. One hostile website used the pulsar argument for a while until I pointed out their conceptual error to a friend, and then it was deleted and has not appeared again since. I suspect that Tom is making the same error. The changes in the eclipse times of Jupiter's and Saturn's moons have in fact been used as basic data in the theory.
Stellar occultations along the ecliptic have also been used as data based on the work of Tom van Flandern who studied the interval 1955-1981. He then came to the conclusion: "the number of atomic seconds in a dynamical interval is becoming fewer. Presumably, if the result has any generality to it, this means that atomic phenomena are slowing down with respect to dynamical phenomena..." In this case the eclipse times and the occultations were used to build the original model and as such are not in conflict with it.

Setterfield: Thanks for your question and thanks for drawing my attention to this website again. I have been mentioned many times on that site, not usually in a good context! This article is based on the majority model for pulsars among astronomers today. If that model is not correct, then neither are the conclusions which that website has drawn. So we need to examine the standard model in detail. On that model we have a rapidly rotating, small and extremely dense neutron star which sends out a flash like a lighthouse every time it rotates. Rotation times are extremely fast on this model. In fact, the star is only dense enough to hold together under the rapid rotation if it is made up of neutrons. Those two facts alone present some of the many difficulties for astronomers holding to the standard model. Yet despite these difficulties, the model is persisted with and patched up as new data comes in. Let me explain. First, a number of university professionals have difficulty with the concept of a star made entirely of neutrons, or neutronium. In the lab, neutrons decay into a proton and electron in something under 14 minutes. Atom-like collections of two or more neutrons disrupt almost instantaneously. Thus the statement has been made that "there can be no such entity as a neutron star. It is a fiction that flies in the face of all we know about elements and their atomic nuclei." [D.E. Scott, Professor & Director of Undergraduate Program & Assistant Dept. Head & Director of Instructional Program, University of Massachusetts/Amherst]. He and a number of other physicists and engineers remain unconvinced by the quantum/relativistic approach that theoretically proposed the existence of neutronium. They point out that it is incorrect procedure to state that neutronium must exist because of pulsar behavior; that is circular reasoning. So the existence of neutronium itself is the first problem for the model. Second, there is the rapid rate of rotation.
For example, X-ray pulsar SAX J1808.4-3658 flashes every 2.5 thousandths of a second, or about 24,000 revs per minute. This goes way beyond what is possible even for a neutron star. In order for the model to hold, this star must have matter even more densely packed than neutrons, so "strange matter" was proposed. Like neutronium, strange matter has never been actually observed, so at this stage it is a non-falsifiable proposition. So the evidence from the data itself suggests that we have the model wrong. If the model is changed, we do not need to introduce either the improbability of neutronium or the even worse scenario of strange matter. Third, on 27th October, 2010, in "Astronomy News," a report from NRAO in Socorro, New Mexico was entitled "Astronomers discover most massive neutron star yet known." This object is pulsar PSR J1614-2230. It "spins" some 317 times per second and, like many pulsars, has a proven companion object, in this case, a white dwarf. This white dwarf orbits in just under 9 days. The orbital characteristics and data associated with this companion show that the neutron star is twice as massive as our sun. And therein lies the next problem. Paul Demorest from NRAO in Tucson stated: "This neutron star is twice as massive as our Sun. This is surprising, and that much mass means that several theoretical models for the internal composition of neutron stars are now ruled out. This mass measurement also has implications for our understanding of all matter at extremely high densities and many details of nuclear physics." In other words, here is further proof that the model is not in accord with reality. Rather than rethink all of nuclear physics and retain the pulsar model, it would be far better to retain nuclear physics and rethink what is happening with pulsars. In rethinking the model, the proponents of one alternative that has gained some attention point out some facts about the pulse characteristics that we observe in these pulsars.
(1) The duty cycle is typically 5% so that the pulsar flashes like a strobe light. The duration of each pulse is only 5% of the length of time between pulses. (2) Some individual pulses vary considerably in intensity. In other words, there is not a consistent signal strength. (3) The pulse polarization indicates that it has come from a strong magnetic field. Importantly, all magnetic fields require electric currents to generate them. These are some important facts. Item (2) alone indicates that the pulsar model likened to a lighthouse flashing is unrealistic. If it were a neutron star with a fixed magnetic field, the signal intensity should be constant. So other options should be considered. Taken together, all these characteristics are typical of an electric arc (lightning) discharge between two closely spaced objects. In fact electrical engineers have known for many years that all these characteristics are typical of relaxation oscillators. In other words, in the lab we can produce these precise characteristics in an entirely different way. This way suggests a different, and probably more viable model. Here is how D.E. Scott describes it:

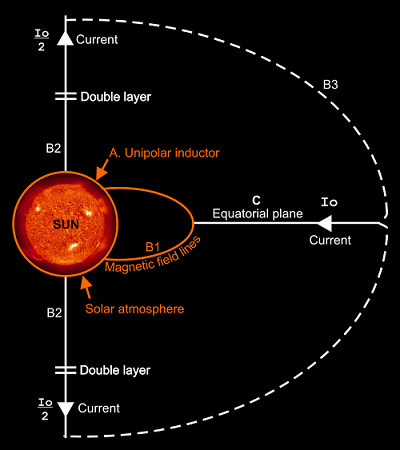

The paper outlining these proposals was entitled "Radiation Properties of Pulsar Magnetospheres: Observation, Theory and Experiment" and appeared in Astrophysics and Space Science 227(1995):229-253. Another paper outlining a similar proposal using a white dwarf star and a nearby planet instead of a double star system was published by Li, Ferrario and Wickramasinghe. It was entitled "Planets Around White Dwarfs" and appeared in the Astrophysical Journal 503:L151-L154 (20 August 1998). Figure 1 is pertinent in this case. Another paper by Bhardwaj and Michael, entitled the "Io-Jupiter System: A Unique Case of Moon-Planet Interaction" has a section devoted to exploring this effect in the case of Binary stars and Extra-Solar Systems. An additional study by Bhardwaj et al also appeared in Advances in Space Research vol 27:11 (2001) pp. 1915-1922. The whole community of plasma physicists and electrical engineers in the IEEE accept these models or something similar for pulsars rather than the standard neutron star explanation. Well, where is this heading? The question involved the slow-down in the speed of light in the context of pulsars and their "rotation rate." If pulsars are not rotating neutron stars at all, but rather involve a systematic electrical discharge in a double star or star and planet system with electric currents in a plasma or dusty disk, then the whole argument breaks down. In fact if the electric discharge model is followed, then the paper on "Reviewing a Plasma Universe with Zero Point Energy" is extremely relevant. The reason is that an increasing ZPE not only slows the speed of light, but also reduces voltages and electric current strengths. When that is factored into the plasma model for pulsars the rate of discharge seen from earth will remain constant, as the slow-down of light cancels out the initial faster rate of discharge in the pulsar system when currents were higher. I hope that this answers your question in a satisfactory manner.

Setterfield: Thanks for your further question. It is true that they try to overcome the difficulties with the thin surface of protons and electrons...etc. However, this is an entirely theoretical concept for which there is no physical evidence. The physical evidence we have comes from the existence of protons, neutrons and electrons in atoms and atomic nuclei. When we consider the known elements, even the heavy man-made elements as well, there is a requirement that, in order to hold a group of neutrons together in a nucleus, an almost equal number of proton-electron pairs are required. The stable nuclei of the lighter elements contain approximately equal numbers of neutrons and protons. This gives a neutron/proton ratio of 1. The heavier nuclei contain a few more neutrons than protons, but the absolute limit is close to 1.5 neutrons per proton. Nuclei that differ significantly from this ratio spontaneously undergo radioactive decay that changes the ratio so that it falls within the required range. Groups of neutrons are therefore not stable by themselves. That is the hard data that we have. If theory does not agree with that data, then there is something wrong with the theory. Currently, the theoretical approach that allows neutronium to exist flies in the face of atomic reality. It has only been accepted so that otherwise anomalous phenomena like pulsars can be explained by entrenched concepts. It is these concepts that need updating as well as the theoretical approaches that support them. It is only as we adopt concepts and theories that are rooted in reality and hard data that we get closer to the scientific truth of a situation. It is for this reason that the motto on our website reads "Letting data lead to Theory." I trust that you appreciate the necessity for that. 
For more discussion on pulsars, see Pulsars and Problems.

Setterfield: In Physical Review Letters published on 27 August 2001 there appeared a report from a group of scientists in the USA, UK and Australia, led by Dr. John K. Webb of the University of New South Wales, Australia. That report indicated the fine-structure constant, α, may have changed over the lifetime of the universe. The Press came up with stories that the speed of light might be changing as a consequence. However, the change that has been measured is only one part in 100,000 over a distance of 12 billion light-years. This means that the differences from the expected measurements are very subtle. Furthermore, the complicated analysis needed to disentangle the effect from the data left some, like Dr. John Bahcall from the Institute for Advanced Study in Princeton, N.J., expressing doubts as to the validity of the claim. This is further amplified because all the measurements and analyses have been done at only one observatory, and may therefore be the result of equipment aberration. Other observatories will be involved in the near future, according to current plans. This may clarify that aspect of the situation. The suggested change in the speed of light in the Press articles was mentioned because light-speed, c, is one of the components making up the fine-structure constant. In fact K. W. Ford (Classical and Modern Physics, Vol. 3, p.1152, Wiley 1974), among others, gives the precise formulation as α = e²/(2εhc) where e is the electronic charge, ε is the electric permittivity of the vacuum, and h is Planck’s constant. In this quantity α, the behaviour of the individual terms is important. For that reason it is necessary to make sure that the ε term is specifically included instead of merely implied as some formulations do. Indeed, I did not specifically include it in the 1987 Report as it played no part in the discussion at that point.
To illustrate the necessity of considering the behaviour of individual terms, the value of light-speed, c, has been measured as decreasing since the 17th or 18th century. Furthermore, while c was measured as declining during the 20th century, Planck’s constant, h, was measured as increasing. However, deep space measurements of the quantity hc revealed this to be invariant over astronomical time. The data obtained from these determinations can be found tabulated in the 1987 Report The Atomic Constants, Light, and Time by T. Norman and B. Setterfield. Since c has been measured as declining, h has been measured as increasing, and hc shown to be invariant, the logical conclusion from this observational evidence is that h must vary precisely as 1/c at all times. If there is any change in α, this observational evidence indicates it can only originate in the ratio e²/ε. This quantity is discussed in detail in the paper "General Relativity and the Zero Point Energy."
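The dependence of the fine-structure constant on its individual terms can be checked numerically. The following is a minimal sketch, assuming the expression quoted from Ford and using CODATA 2018 SI values for the constants (those numbers, the function name and the scaling factor `k` are illustrative choices, not taken from the discussion). It evaluates the constant with present-day values and then again with c multiplied by `k` and h divided by `k`, so that the product hc is held fixed as the argument above requires.

```python
import math

# CODATA 2018 values in SI units (assumed here; not quoted in the text).
E_CHARGE = 1.602176634e-19      # electronic charge e, coulombs
EPSILON_0 = 8.8541878128e-12    # electric permittivity of the vacuum, F/m
PLANCK_H = 6.62607015e-34       # Planck's constant h, J s
LIGHT_SPEED = 299792458.0       # speed of light c, m/s


def fine_structure(e, eps, h, c):
    """Return e^2 / (2 * eps * h * c), the form quoted from Ford."""
    return e**2 / (2.0 * eps * h * c)


alpha_now = fine_structure(E_CHARGE, EPSILON_0, PLANCK_H, LIGHT_SPEED)

# Hypothetical scaling: c is k times larger and h is k times smaller,
# so the product hc is the same in both evaluations.
k = 10.0
alpha_scaled = fine_structure(E_CHARGE, EPSILON_0, PLANCK_H / k, LIGHT_SPEED * k)

print(f"1/alpha with present values = {1.0 / alpha_now:.3f}")
print(f"1/alpha with scaled h and c = {1.0 / alpha_scaled:.3f}")
print("unchanged:", math.isclose(alpha_now, alpha_scaled, rel_tol=1e-12))
```

With hc held fixed the two evaluations agree, so in this expression only a change in the charge or permittivity terms can alter the result. The sketch illustrates the algebra only; it says nothing about whether h and c actually vary.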

Setterfield: In answer to the question, the quantity being measured here is the fine structure constant, alpha. This is made up of 4 other atomic quantities in two groups of two each. The first is the product of Planck's constant, h, and the speed of light, c. Since the beginning of this work in the 1980's it has been demonstrated that the product hc is an absolute constant. That is to say it is invariant with all changes in the properties of the vacuum. Thus, if h goes up, c goes down in inverse proportion. Therefore, the fine structure constant, alpha, cannot register any changes in c or h individually, and, as we have just pointed out, the product hc is also invariant.

As a consequence, any changes in alpha must come from the other ratio involved, namely the square of the electronic charge, e, divided by the permittivity of free space. Our work has shown that this ratio is constant in free space. However, in a gravitational field this ratio may vary in such a way that alpha increases very slightly. If there are any genuine changes in alpha, this is the source of the change. The errors in measurement in the article that raised the query cover the predicted range of the variation on our approach. The details of what we predict and a further discussion on this matter are found in our article in the Journal of Theoretics entitled "General Relativity and the Zero Point Energy."

Setterfield: It has been stated on a number of occasions that Supernova 1987A in the Large Magellanic Cloud (LMC) has effectively demonstrated that the speed of light, c, is a constant. There are two phenomena associated with SN1987A that lead some to this erroneous conclusion. The first of these features was the exponential decay in the relevant part of the light-intensity curve. This gave sufficient evidence that it was powered by the release of energy from the radioactive decay of cobalt 56 whose half-life is well-known. The second feature was the enlarging rings of light from the explosion that illuminated the sheets of gas and dust some distance from the supernova. We know the approximate distance to the LMC (about 165,000 to 170,000 light years), and we know the angular distance of the ring from the supernova. It is a simple calculation to find how far the gas and dust sheets are from the supernova. Consequently, we can calculate how long it should take light to get from the supernova to the sheets, and how long the peak intensity should take to pass. The problem with the radioactive decay rate is that this would have been faster if the speed of light was higher. This would lead to a shorter half-life than the light-intensity curve revealed. For example, if c were 10 times its current value (c now), the half-life would be only 1/10th of what it is today, so the light-intensity curve should decay in 1/10th of the time it takes today. In a similar fashion, it might be expected that if c was 10c now at the supernova, the light should have illuminated the sheets and formed the rings in only 1/10th of the time at today's speed. Unfortunately, or so it seems, both the light intensity curve and the timing of the appearance of the rings (and their disappearance) are in accord with a value for c equal to c now. Therefore it is assumed that this is the proof needed that c has not changed since light was emitted from the LMC, some 170,000 light years away. 
However, there is one factor that negates this conclusion for both these features of SN1987A. Let us accept, for the sake of illustration, that c WAS equal to 10c now at the LMC at the time of the explosion. Furthermore, according to the c decay (cDK) hypothesis, light-speed is the same at any instant right throughout the cosmos due to the properties of the physical vacuum. Therefore, light will always arrive at earth with the current value of c now. This means that in transit, light from the supernova has been slowing down. By the time it reaches the earth, it is only travelling at 1/10th of its speed at emission by SN1987A. As a consequence the rate at which we are receiving information from that light beam is now 1/10th of the rate at which it was emitted. In other words we are seeing this entire event in slow-motion. The light-intensity curve may have indeed decayed 10 times faster, and the light may indeed have reached the sheets 10 times sooner than expected on constant c. Our dilemma is that we cannot prove it for sure because of the slow-motion effect. At the same time this cannot be used to disprove the cDK hypothesis. As a consequence other physical evidence is needed to resolve the dilemma. This is done in "Zero Point Energy and the Redshift" as presented at the NPA Conference, June 2010, where it is shown that the redshift of light from distant galaxies gives a value for c at the moment of emission. By way of clarification, at NO time have I ever claimed the apparent superluminal expansion of quasar jets verify higher values of c in the past. The slow-motion effect discussed earlier rules that out absolutely. The standard solution to that problem is accepted here. The accepted distance of the sheets of matter from the supernova is also not in question. That is fixed by angular measurement. 
What IS affected by the slow motion effect is the apparent time it took for light to get to those sheets from the supernova, and the rate at which the light-rings on those sheets grew. Additional Note: In order to clarify some confusion on the SN1987A issue and light-speed, let me give another illustration that does not depend on the geometry of triangles etc. Remember, distances do not change with changing light-speed. Even though it is customary to give distances in light-years (LY), that distance is fixed even if light-speed is changing. To start, we note that it has been established that the distance from SN1987A to the sheet of material that reflected the peak intensity of the light burst from the SN, is 2 LY, a fixed distance. Imagine that this distance is subdivided into 24 equal light-months (LM). Again the LM is a fixed distance. Imagine further that as the peak of the light burst from the SN moved out towards the sheet of material, it emitted a pulse in the direction of the earth every time it passed a LM subdivision. After 24 LM subdivisions the peak burst reached the sheet. Let us assume that there is no substantive change in light-speed from the time of the light-burst until the sheet becomes illuminated. Let us further assume for the sake of illustration, that the value of light-speed at the time of the outburst was 10c now. This means that the light-burst traversed the DISTANCE of 24 LM or 2 LY in a TIME of just 2.4 months. It further means that as the travelling light-burst emitted a pulse at each 1 LM subdivision, the series of pulses were emitted 1/10th month apart IN TIME. However, as this series of pulses travelled to earth, the speed of light slowed down to its present value. It means that the information contained in those pulses now passes our earth-bound observers at a rate that is 10 times slower than the original event. Accordingly, the pulses arrive at earth spaced one month apart in time. 
Observers on earth assume that c is constant since the pulses were emitted at a DISTANCE of 1 LM apart and the pulses are spaced one month apart in TIME. The conclusion is that this slow-motion effect makes it impossible to find the value of c at the moment of emission by this sort of process. By a similar line of reasoning, superluminal jets from quasars can be shown to pose just as much of a problem on the variable c model as on conventional theory. The standard explanation therefore is accepted here.
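The light-month illustration above is simple arithmetic, and can be sketched as follows. This is a minimal sketch under the assumptions stated in the illustration itself: a fixed distance of 24 light-months, a light-speed at emission of 10 times today's value, and a slow-motion factor at reception equal to that same ratio. The function and variable names are hypothetical, and the slow-motion step is taken from the text rather than derived.

```python
import math


def slow_motion_illustration(distance_lm=24.0, speed_factor=10.0):
    """Reproduce the light-month arithmetic of the illustration.

    distance_lm  : fixed distance from supernova to sheet, in light-months
    speed_factor : assumed ratio of light-speed at emission to today's value
    """
    # Time for the burst to cross the fixed distance at the higher speed.
    crossing_months = distance_lm / speed_factor
    # One pulse is emitted at every light-month mark, so the spacing in
    # time between emitted pulses is one month divided by the speed factor.
    emitted_spacing = 1.0 / speed_factor
    # Assumption taken from the text, not derived here: information arrives
    # at earth slowed by the same factor by which light-speed has dropped.
    received_spacing = emitted_spacing * speed_factor
    observed_crossing = crossing_months * speed_factor
    return crossing_months, emitted_spacing, received_spacing, observed_crossing


crossing, emitted, received, observed = slow_motion_illustration()
print(f"crossing time at the source : {crossing:.1f} months")
print(f"pulse spacing when emitted  : {emitted:.1f} months")
print(f"pulse spacing when received : {received:.1f} months")
print(f"crossing time as observed   : {observed:.1f} months")
```

Whatever value is chosen for `speed_factor`, the received spacing comes out as one month per light-month, which is the point of the illustration: on these assumptions the observation cannot distinguish the emission speed.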

Setterfield: Thanks for the question, it's an old one. You have assumed in your question that other atomic constants have in fact remained constant as c has dropped with time. This is not the case. In our 1987 Report, Trevor Norman and I pointed out that a significant number of other atomic constants have been measured as changing lock-step with c during the 20th century. This change is in such a way that energy is conserved during the cDK process. All told, our Report lists 475 measurements of 11 other atomic quantities by 25 methods in dynamical time. This has the consequence that in the standard equation [E = mc²] the energy E from any reaction is unchanged (within a quantum interval - which is the case in the example under discussion here). This happens because the measured values of the rest-mass, m, of atomic particles reveal that they are proportional to 1/c². The reason why this is so is fully explored in Reviewing the Zero Point Energy. Therefore in reactions from known transitions, such as occurred in SN1987A with the emission of gamma rays and neutrinos, the emission energy will be unchanged for a given reaction. I trust this reply is adequate. (1/21/99)

Setterfield: It really does appear as if the Professor has not done his homework properly on the cDK (or DSLM) issue that he discussed in relation to Supernova 1987A. He pointed out that neutrinos gave the first signal that the star was exploding, and that neutrinos are now known to have mass. He then goes on to state (incorrectly) that neutrinos would have an increasing rest mass (or rest energy) as we go BACK into history. He then asks "if we believe in the conservation of energy, where has all this energy gone?" He concluded that this energy must have been radiated away and so should be detectable. Incredibly, the Professor has got the whole thing round the wrong way. If he had read our 1987 Report, he would have realised that the observational data forced us to conclude that with cDK there is also conservation of energy. As the speed of light DECREASES with time, the rest mass will INCREASE with time. This can be seen from the Einstein relation [E = mc²]. For Energy E to remain constant, the rest mass m will INCREASE with time in proportion to [1/c²] as c is dropping. This INCREASE in rest-mass with time has been experimentally supported by the data as listed in Table 14 of our 1987 Report. There is thus no surplus energy to radiate away at all, contrary to the Professor's suggestion, and the rest-mass problem that he poses will also disappear. In a similar way, light photons would not radiate energy in transit as their speed drops. According to experimental evidence from the early 20th century when c was measured as varying, it was shown that wavelengths, [w], of light in transit are unaffected by changes in c. Now the speed of light is given by [c = fw] where [f] is light frequency. It is thus apparent that as [c] drops, so does the frequency [f], as [w] is unchanged. The energy of a light photon is then given by [E = hf] where [h] is Planck's constant.
Experimental evidence listed in Tables 15A and 15B in the 1987 Report as well as the theoretical development shows that [h] is proportional to [1/c] so that [hc] is an absolute constant. This latter is supported by evidence from light from distant galaxies. As a result, since [h] is proportional to [1/c] and [f] is proportional to [c], then [E = hf] must be a constant for photons in transit. Thus there is no extra radiation to be emitted by photons in transit as light-speed slows down, contrary to the Professor's suggestion, as there is no extra energy for the photon to get rid of. I hope that this clarifies the matter. I do suggest that the 1987 Report be looked at in order to see what physical quantities were changing, and in what way, so that any misunderstanding of the real situation as given by observational evidence can be avoided. "Reviewing the Zero Point Energy" should also be of help.
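The cancellation argued above can be written out in one line. This is only a restatement of the relations already given in the reply, with the symbol $k$ introduced here as a label for the constant product $hc$:

```latex
\[
c = f w, \qquad h = \frac{k}{c}
\quad\Longrightarrow\quad
E = h f = \frac{k}{c}\cdot\frac{c}{w} = \frac{k}{w}
\]
```

Since $w$ is taken to be unchanged in transit and $k$ is constant by assumption, $E$ does not depend on $c$ in this picture.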

Question: What about SN1997ff?

Setterfield: There has been much interest generated in the press lately over the analysis by Dr. Adam G. Riess and Dr. Peter E. Nugent of the decay curve of the distant supernova designated as SN 1997ff. In fact, over the past few years, a total of four supernovae have led to the current state of excitement. The reason for the surge of interest is the distances that these supernovae are found to be when compared with their redshift, z. According to the majority of astronomical opinion, the relationship between an object's distance and its redshift should be a smooth function. Thus, given a redshift value, the distance of an object can be reasonably estimated. One way to check this is to measure the apparent brightness of an object whose intrinsic luminosity is known. Then, since brightness falls off by the inverse square of the distance, the actual distance can be determined. For very distant objects something of exceptional brightness is needed. There are such objects that can be used as 'standard candles', namely supernovae of Type Ia. They have a distinctive decay curve for their luminosity after the supernova explosion, which allows them to be distinguished from other supernovae. In this way, the following four supernovae have been examined as a result of photos taken by the Hubble Space Telescope. SN 1997ff at z = 1.7; SN 1997fg at z = 0.95; SN 1998ef at z = 1.2; and SN 1999fv also at z = 1.2. The higher the redshift z, the more distant the object should be. Two years ago, the supernovae at z = 0.95 and z = 1.2 attracted attention because they were FAINTER and hence further away than expected. This led to two main competing theories among cosmologists. First, that the faintness was due to dust, or second, that the faintness was due to Einstein’s cosmological constant – a kind of negative gravity expanding the universe progressively faster than if the expansion was due solely to the Big Bang.
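The standard-candle step described above, recovering distance from apparent brightness when the intrinsic luminosity is known, can be sketched as follows. This is a minimal sketch assuming the plain inverse-square law with no dust, redshift or cosmological corrections; the function name is hypothetical, and the solar values are round reference figures used only as a sanity check, not data from the discussion.

```python
import math


def distance_from_flux(luminosity_w, flux_w_m2):
    """Distance in metres at which a source of the given luminosity
    produces the given flux, using flux = L / (4 * pi * d**2)."""
    return math.sqrt(luminosity_w / (4.0 * math.pi * flux_w_m2))


# Sanity check with round solar values (assumed, not from the text).
SOLAR_LUMINOSITY = 3.828e26   # watts
SOLAR_CONSTANT = 1361.0       # watts per square metre at earth

d_sun = distance_from_flux(SOLAR_LUMINOSITY, SOLAR_CONSTANT)
print(f"recovered earth-sun distance: {d_sun:.3e} m")

# A source of the same luminosity that appears four times fainter
# is inferred to be twice as far away.
d_faint = distance_from_flux(SOLAR_LUMINOSITY, SOLAR_CONSTANT / 4.0)
print(f"distance ratio for one quarter of the flux: {d_faint / d_sun:.2f}")
```

This is why a supernova that is fainter than expected for its redshift is read as being further away than expected, and a brighter one as being closer.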

The cosmological constant has been invoked sporadically since the time of Einstein as the answer to a number of problems. It is sometimes called the "false vacuum energy." However, in stating this, it should be pointed out, as Haisch and others have done, that the cosmological constant has nothing to do with the zero-point energy. This cosmological constant, lambda, is frequently used in various models of the Big Bang, to describe its earliest moments. It has been a mathematical device used by some cosmologists to inflate the universe dramatically, and then have lambda drop to zero. It now appears that it would be helpful if lambda maintained its prominence in the history of the cosmos to solve more problems. Whether it is the real answer is another matter. Nevertheless, it is a useful term to include in some cosmological equations to avoid embarrassment. At this point, the saga takes another turn. Recent work reveals that the object SN1997ff, the most distant of the four, turns out to be BRIGHTER than expected for its redshift value. This event has elicited the following comments from Adrian Cho in New Scientist for 7 April, 2001, page 6 in an article entitled "What's the big rush?"

Well, that is one option now that dust has been eliminated as a suspect. However, the answer could also lie in a different direction to that suggested above as there is another option well supported by other observational evidence. For the last two decades, astronomer William Tifft of Arizona has pointed out repeatedly that the redshift is not a smooth function at all but is, in fact, going in "jumps", or is quantised. In other words, it proceeds in a steps and stairs fashion. Tifft's analyses were disputed, so in 1992 Guthrie and Napier did a study to disprove the matter. They ended up agreeing with Tifft. The results of that study were themselves disputed, so Guthrie and Napier conducted an exhaustive analysis on a whole new batch of objects. Again, the conclusions confirmed Tifft's contention. The quantisations of the redshift that were noted in these studies were on a relatively small scale, but analysis revealed a basic quantisation that was at the root of the effect, of which the others were simply higher multiples. However, this was sufficient to indicate that the redshift was probably not a smooth function at all. If these results were accepted, then the whole interpretation of the redshift, namely that it represented the expansion of the cosmos by a Doppler effect on light waves, was called into question. This becomes apparent since there was no good reason why that expansion should go in a series of jumps, anymore than cars on a highway should travel only in multiples of, say, 5 kilometres per hour. However, a periodicity on a much larger scale has also been noted for very distant objects. In 1990, Burbidge and Hewitt reviewed the observational history of these preferred redshifts. Objects were clumping together in preferred redshifts across the whole sky. These redshifts were listed as z = 0.061, 0.30, 0.60, 0.96, 1.41, 1.96, 2.63 and 3.45 [G. Burbidge and A. Hewitt, Astrophysical Journal, vol. 359 (1990), L33]. In 1992, Duari et al. 
examined 2164 objects with redshifts ranging out to z = 4.43 in a statistical analysis [Astrophysical Journal, vol. 384 (1992), 35], and confirmed these redshift peaks listed by Burbidge and Hewitt. This sequence has also been described accurately by the Karlsson formula. Thus two phenomena must be dealt with, both the quantisation effect itself and the much larger periodicities which mean objects are further away than their redshifts would indicate. Apparent clustering of galaxies is due to this large-scale periodicity. A straightforward interpretation of both the quantisation and periodicity is that the redshift itself is going in a predictable series of steps and stairs on both a small as well as a very large scale. This is giving rise to the apparent clumping of objects at preferred redshifts. The reason is that on the flat portions of the steps and stairs pattern, the redshift remains essentially constant over a large distance, so many objects appear to be at the same redshift. By contrast, on the rapidly rising part of the pattern, the redshift changes dramatically over a short distance, and so relatively few objects will be at any given redshift in that portion of the pattern. These considerations are important in the current context. As noted above by Riess, the objects at z = 0.95 and z = 1.2 are systematically faint for their assumed redshift distance. By contrast, the object at z = 1.7 is unusually bright for its assumed redshift distance. Notice that the object at z = 0.95 is at the middle of the flat part of the step according to the redshift analyses, while z = 1.2 is right at the back of the step, just before the steep climb. Consequently for their redshift value, they will be further away in distance than expected, and will therefore appear fainter. By contrast, the object at z = 1.7 is on the steeply rising part of the pattern.
Because the redshift is changing rapidly over a very short distance astronomically speaking, the object will be assumed to be further away than it actually is and will thus appear to be brighter than expected. When interpreted this way, these recent results support the existence of the redshift periodicities noted by Burbidge and Hewitt, statistically confirmed by Duari et al., and described by the Karlsson formula. In so doing, they also imply that redshift behaviour is not a smooth function, but rather goes in a steps and stairs pattern. If this is accepted, it means that the redshift is not a measure of universal expansion, but must have some other interpretation. The research that has been conducted on the changing speed of light over the last 10 years has been able to replicate both the basic quantisation picked up by Tifft, and the large-scale periodicities that are in evidence here. On this research, the redshift and light-speed are related effects that mutually derive from changing vacuum conditions. The evidence suggests that the vacuum zero-point energy (ZPE) is increasing as a result of initial expansion of the cosmos. It has been shown by Puthoff [Physical Review D 35:10 (1987), 3266] that the ZPE is maintaining all atomic structures throughout the universe. Therefore, as the ZPE increases, the energy available to maintain atomic orbits increases. Once a quantum threshold has been reached, every atom in the cosmos will assume a higher energy state for a given orbit and so the light emitted from those atoms will be bluer than those in the past. Therefore as we look back to distant galaxies, the light emitted from them will appear redder in quantised steps. At the same time, since the speed of light is dependent upon vacuum conditions, it can be shown that a smoothly increasing ZPE will result in a smoothly decreasing light-speed. 
Although the changing ZPE can be shown to be the result of the initial expansion of the cosmos, the fact that the quantised effects are not "smeared out" also indicates that the cosmos is now essentially static, just as Narlikar and Arp have demonstrated [Astrophysical Journal vol. 405 (1993), 51]. In view of the dilemma that confronts astronomers with these supernovae, this observational alternative may be worth serious examination.
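The Karlsson formula mentioned above is not written out in this discussion. As a rough illustration only, the sketch below uses the form in which it is commonly quoted elsewhere: preferred redshifts spaced by a constant step of about 0.089 in log10(1 + z), starting from a first peak near z = 0.061. Both constants, and the function name `karlsson_peaks`, are assumptions brought in for this example and are not taken from the text above.

```python
import math

# Assumed constants (not given in this discussion): the commonly quoted
# Karlsson spacing in log10(1 + z) and the redshift of the first peak.
KARLSSON_STEP = 0.089
FIRST_PEAK_Z = 0.061


def karlsson_peaks(count):
    """Return the first `count` preferred redshifts of the assumed series."""
    base = math.log10(1.0 + FIRST_PEAK_Z)
    # Each successive peak adds one constant step in log10(1 + z).
    return [10 ** (base + n * KARLSSON_STEP) - 1.0 for n in range(count)]


if __name__ == "__main__":
    for n, z in enumerate(karlsson_peaks(6)):
        print(f"peak {n}: z = {z:.2f}")
```

Because the spacing is constant in log10(1 + z) rather than in z itself, the gaps between successive preferred redshifts widen as z increases.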

Setterfield: If a redshift is due to motion, it is not quantized; it is a smooth smearing depending on the velocity. There is not a quantized effect. We see this smooth smearing in velocities of stars, rotation of stars, and the movement of stars within galaxies. What happens is that the wavelength of the photon is stretched or contracted due to the velocity at the time of emission. Therefore the fact that the photon originates as a discrete packet of energy is irrelevant. The point that needs to be made is that in distant galaxies, photons of light have been emitted with a range of wavelengths. All these wavelengths are simultaneously shifted in jumps by the same fraction, and it is these jumps which Tifft has noted, and which are not indicative of a Doppler shift. So some other effect is at work.

Slow motion effects?

Setterfield: Since many atomic processes run faster in proportion to c, but the slow motion effect at the point of reception is also operating, the combined overall result is that everything seems to proceed at the same unchanged pace. For example, Supernova 1987A involves the radioactive decay of Cobalt 56. Since this is an atomic process, the Cobalt 56 was decaying much faster when the speed of light was higher. However, this is exactly offset by the slow motion effect when that signal comes to earth. As a consequence, the decay of Cobalt 56 in Supernova 1987A seems to have had the same half-life then as it has now. Therefore no astronomical evidence for a slow motion effect in atomic processes would be expected.
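The cancellation described above can be put as a short piece of arithmetic. The sketch below is only an illustration of the argument as stated, under two assumptions taken from that argument: that the decay rate at the source scales directly with c, and that the slow motion effect stretches received durations by the same factor. The function name, the `c_ratio` parameter and the laboratory half-life of roughly 77.2 days for Cobalt 56 are supplied for the example and do not come from the text.

```python
# Approximate laboratory half-life of Cobalt 56 in days (assumed value
# supplied for this illustration).
CO56_HALF_LIFE_DAYS = 77.2


def observed_half_life(lab_half_life, c_ratio):
    """Half-life seen on earth when light-speed at emission was
    `c_ratio` times its present value.

    Assumption 1: decay rate scales with c, so the half-life at the
    source is shorter by the factor c_ratio.
    Assumption 2: the slow motion effect stretches every received
    duration by the same factor c_ratio.
    """
    if c_ratio <= 0:
        raise ValueError("c_ratio must be positive")
    half_life_at_source = lab_half_life / c_ratio
    return half_life_at_source * c_ratio


if __name__ == "__main__":
    for ratio in (1.0, 10.0, 1.0e6):
        seen = observed_half_life(CO56_HALF_LIFE_DAYS, ratio)
        print(f"c ratio {ratio:g}: observed half-life = {seen:.1f} days")
```

Under these two assumptions the factor cancels for any value of `c_ratio`, which is why the argument expects no observable change in the decay curve.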

Setterfield: When George Gamow (1949) proposed a beginning to the expansion which was the accepted explanation for the redshift, Hoyle derisively called it the "Big Bang." Nothing exploded or banged in Gamow's idea, though; there was simply a hot, dense beginning from which everything expanded. Hoyle put up a different model in which matter was continuously being originated. The major objection to Gamow's "Big Bang" was that it was too close to the biblical model of creation! In fact, even up to 1985 the Cambridge Atlas of Astronomy (pp 381, 384) referred to the "Big Bang" as the 'theory of sudden creation.' On 10th August, 1989, Dr. John Maddox, editor of Nature, declared the Big Bang philosophically unacceptable in an article entitled "Down with the Big Bang" (Nature, vol. 340, p. 425). So what is the difference between the BB and the biblical model? Essentially naturalism. The Bible says God did it and secular science says it somehow just happened. However, the Bible does say, twelve times, that God stretched the heavens. So that expansion is definitely in the Bible.

In the meantime, the steady state theory was effectively disproved by quasars being discovered in the mid-1960s. It is interesting that Gamow's two young colleagues, Ralph Alpher and Robert Herman, predicted in a 1948 letter to Nature that the CBR temperature should be found to be about 5 deg K [Nature, 162, 774]. They predicted 10-15 degrees K. The background radiation was found, but at a much lower temperature.

The glitch for the BB right now in terms of a continuously expanding universe is the presence of the quantized redshift. On May 5th and 7th of this year, two abstracts in astrophysics were published.* In the second one, Morley Bell writes: "Evidence was presented recently suggesting that [galaxy] clusters studied by the Hubble Key Project may contain quantized intrinsic redshift components that are related to those reported by Tifft. Here we report the results of a similar analysis using 55 spiral ... and 36 Type Ia supernovae galaxies. We find that even when many more objects are included in the sample there is still clear evidence that the same quantized intrinsic redshifts are present..." This is an indication that the redshift might not be a Doppler effect. Back in 1929, Hubble himself had some doubts about connecting the two. The redshift number is obtained by comparing the light received with the standard laboratory measurements for whatever element is being seen. So this is simply a difference between two measurements and there is no intrinsic connection between the redshift measurement and velocity. In fact it has been noted that at the center of the Virgo cluster the high velocity of the galaxies wipes out the quantization of the redshift.
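The comparison between received light and laboratory measurement described above is the standard definition of the redshift number: z is the observed wavelength minus the laboratory wavelength, divided by the laboratory wavelength. A minimal sketch follows; the function name is hypothetical, and the example wavelengths (the hydrogen-alpha line at about 656.28 nm in the laboratory, observed at twice that value) are illustrative figures, not measurements from this discussion.

```python
def redshift(observed_nm, lab_nm):
    """Redshift number z from an observed and a laboratory wavelength.

    z = (observed - lab) / lab. Positive z means the line is shifted to
    the red, negative z means it is shifted to the blue.
    """
    if lab_nm <= 0:
        raise ValueError("laboratory wavelength must be positive")
    return (observed_nm - lab_nm) / lab_nm


if __name__ == "__main__":
    H_ALPHA_LAB_NM = 656.28      # laboratory hydrogen-alpha wavelength
    observed = 2 * H_ALPHA_LAB_NM  # illustrative observed value only
    print(f"z = {redshift(observed, H_ALPHA_LAB_NM):.2f}")
```

Nothing in this calculation refers to velocity; any velocity or distance attached to z comes from a separate interpretive step.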

Interestingly, when the Bible speaks of God stretching the heavens, eleven of the twelve times the verb translated "stretched" is in the past completed tense. There is another possible cause for the quantized redshift, which is explored in Zero Point Energy and the Redshift. Essentially, it has to do with the increasing zero point energy, as measured by Planck's constant. The statistics regarding that are in his earlier major paper, here.

As far as the cosmic background radiation is concerned, the first point to note is that its temperature is much lower than that initially suggested by Gamow. This leaves some room for doubt as to whether or not this is the effect he was predicting. It is possible that even in the creation scenario, with rapid expansion from an initial super-dense state, this effect would still be seen. However, there is another explanation which has surfaced in the last year or so. It has to do with the zero point energy. In the same way that the ZPE allows for the manifestation of virtual particle pairs, such as electron/positron pairs, it is also reasonable to propose that the ZPE would allow the formation of virtual tachyon pairs. Calculation has shown that the energy density of the radiation from these tachyon pairs has the same profile as that of the cosmic microwave background. So that is another point to consider.

As far as the abundance of elements is concerned, there are several anomalies existing with current BB theory. Gamow originally proposed the building up of all elements in his BB scenario. However, a blockage was found in that process which was difficult to overcome. As a result, Hoyle, Burbidge, and Fowler examined the possibility of elements being built up within stars, which later exploded and spread them out among the intergalactic clouds. This proposal is now generally accepted. However, it leads to a number of problems, such as the anomalous abundance of iron in the regions around quasars. There are other problems, such as anomalous groups of stars near the center of our galaxy and the Andromeda galaxy which have high metal abundances. Because the current approach relies on the production of these elements in the first generation of stars, the process obviously takes time. As a consequence, these anomalous stars can only be accounted for by collisions or cannibalization of smaller star systems by larger galaxies.
There is another possible answer, however, which creationists need to consider. It has been shown that in a scenario with small black holes, such as Planck particles, with the addition of a proton to the system, or to a system with a negatively-charged black hole, the build-up of elements becomes possible. The blockage that element formation in stars was designed to overcome is eliminated, because neutrons can also be involved, as can alpha particles. As a consequence, it is possible to build up elements other than hydrogen and helium in the early phases of the universe. This may happen in local concentrations where negative black holes formed by the agglomeration of Planck Particles exist. Stars that form in those areas would then have apparently anomalous metal abundances. Importantly, in this scenario, if Population II stars were formed on Day 1 of Creation Week, as suggested by Job 38, and Population I stars were formed half-way through Day 4, as listed in Genesis 1:14, we have a good reason why the Population I stars contain more metals than the Population II stars, as this process from the agglomeration of black holes would have had time to act.

Regarding distance and age of galaxies: There is no argument that distance indicates age. This should be stated first. It was this very fact that the further out we looked, the more different the universe appeared, that caused the downfall of the Steady State model. Specifically, it was the discovery of quasars that produced this result. Importantly, quasars become brighter and more numerous the further out we look. At a redshift of around 1.7, their numbers and luminosity appear to plateau. Closer in from 1.7, their numbers and intensity decline. Furthermore, a redshift of 1.7 is also an important marker for the formation of stars. We notice starburst galaxies of increasing activity as we go back to a redshift of 1.7. At that point, star formation activity appears to reach a maximum where young, hot blue stars of Population I are being formed (therefore emitting higher amounts of UV radiation). At a redshift of 1.7, the redshift/distance relationship also undergoes a major change. The curve steepens considerably as we go back from that point. This has caused current BB thinking to introduce some extra terms into their equations which would indicate that the rate of expansion of the cosmos has sped up as we come forward in time from that point. On the lightspeed scenario, a redshift of 1.7 effectively marks the close of Creation Week, and so all of these above effects would be expected to taper off after that time.

* Astrophysics, abstract astro-ph/0305060 and Astrophysics, abstract astro-ph/0305112

Wandering Planets?

Setterfield: The idea of the changes in orbits initially came from the work done by Velikovsky. While his data collection is remarkable, I disagree with his conclusions. For example, he talks about planet Venus in a wandering orbit, causing some catastrophes here on earth. One of the problems with this, as with all similar proposals, is that the planet Venus lies in the plane of the ecliptic -- the same as all other planets except Pluto -- while comets and similar wandering bodies move above and below the plane of the ecliptic. Furthermore, as the orbit of a wandering Venus eventually stabilized around the sun, it would still be highly elliptical. By contrast, the orbit of Venus is the most nearly circular of all the planetary orbits. Consequently, it is the least likely to have been a wanderer in the past. A similar statement may be made about Mars: its orbit is somewhat more elliptical than the Earth's, but not nearly as elliptical as Pluto's.